Of the many variations on Martin Niemöller’s famous ‘First they came for…’ speech I’ve seen kicking around the Internet, none, so far as I can recall, has ever started with the line ‘First they came for the sex offenders’.

As population sub-groups go, it’s difficult to think of many that are less likely to attract any significant level of public sympathy without ratcheting up the ante to the level of prolific serial killers and murderous despots. Nevertheless, there are still times when it is necessary to speak out on behalf of the basic rights of people that one is instinctively inclined to despise, not least when those rights are unjustly and unfairly threatened by the state, as will shortly be happening in the UK unless this latest idiotic Ministry of Justice (MoJ) scheme is subjected to careful scrutiny:

MPs are expected to clear the way for the introduction of compulsory lie detector tests to monitor convicted sex offenders across England and Wales from next January.

The national rollout of US-style mandatory polygraph tests for serious sex offenders who have been released into the community after serving their prison sentence follows a successful pilot scheme. The trial was carried out from 2009-11 in two Midlands probation areas and found that offenders taking such tests were twice as likely to tell probation staff they had contacted a victim, entered an exclusion zone or otherwise breached terms of their release licence.

Continuing concerns about the reliability of the tests and misinterpretation of the results mean they still cannot be used in any court in England and Wales.

But it is expected that the compulsory polygraph tests will be used to monitor the behaviour of 750 of the most serious sex offenders, all of whom have been released into the community after serving a sentence of at least 12 months in jail.

Clearly, the British state has learned nothing at all from its last disastrous flirtation with ‘lie detection’ technologies, which saw the Department for Work and Pensions piss more than £2 million of public money down the drain trialling a ‘Voice Risk Analysis’ system on benefits claimants that speech scientists had already found to be wholly lacking in both scientific validity and reliability.

That is, perhaps, entirely unsurprising when one considers the craven and intellectually dishonest manner in which the DWP chose to avoid stating the obvious conclusion in its own published evaluation of the trial, i.e. that the system it tested is a scientifically fraudulent crock of shit. That decision, one strongly suspects, was driven more by commercial considerations than anything else, and it has shamefully allowed its commercial partner in these trials, Capita, to continue to market the system to at least some of the local authorities for which it currently provides outsourced services.

Okay, so what we’re dealing with here isn’t a so-called ‘voice risk/stress’ detection system but rather a conventional polygraph, which at least has the virtue of detecting something tangible in terms of physiological indicators of stress/arousal, i.e. changes in blood pressure, pulse, respiration and skin conductivity/resistivity. Beyond that, however, there is a fundamental problem when we try to make the leap from identifying physiological symptoms of stress or arousal to ascribing those symptoms to a specific cause.

Polygraph Accuracy. Almost a century of research in scientific psychology and physiology provides little basis for the expectation that a polygraph test could have extremely high accuracy. The physiological responses measured by the polygraph are not uniquely related to deception. That is, the responses measured by the polygraph do not all reflect a single underlying process: a variety of psychological and physiological processes, including some that can be consciously controlled, can affect polygraph measures and test results. Moreover, most polygraph testing procedures allow for uncontrolled variation in test administration (e.g., creation of the emotional climate, selecting questions) that can be expected to result in variations in accuracy and that limit the level of accuracy that can be consistently achieved.

That assessment comes from what is still, ten years on from publication, the definitive scientific review of the use, accuracy and validity of polygraph technology: “The Polygraph and Lie Detection”, a report published in 2003 by the US National Research Council, which is the working arm of the US National Academy of Sciences.

In dealing with other research fields I would ordinarily suggest that a ten-year-old report should be approached with a degree of caution, as one would normally expect things to have moved on in terms of both the quality of research and the evidence base since the report was published. However, in this case that’s not really an issue, for reasons that the report itself notes:

– The bulk of polygraph research can accurately be characterized as atheoretical. The field includes little or no research on a variety of variables and mechanisms that link deception or other phenomena to the physiological responses measured in polygraph tests.

– Research on the polygraph has not progressed over time in the manner of a typical scientific field. Polygraph research has failed to build and refine its theoretical base, has proceeded in relative isolation from related fields of basic science, and has not made use of many conceptual, theoretical, and technological advances in basic science that are relevant to the physiological detection of deception. As a consequence, the field has not accumulated knowledge over time or strengthened its scientific underpinnings in any significant manner.

For any research field those are pretty damning conclusions and, although further research has been conducted during that period, almost all of it has continued to dodge the key, and very basic, scientific questions posed by the NRC in its report in favour of conducting studies which focus squarely on trying to validate the use of polygraph technologies in practical settings ranging from the management of sex offenders to pre-employment screening. Skeptics, in particular, might well note a marked similarity between the general research methods adopted by polygraph researchers and those adopted by researchers studying so-called alternative medicines and therapies, inasmuch as both go to considerable lengths to avoid tackling the most basic questions of scientific validity in favour of producing studies which aim to provide evidence of efficacy or effectiveness, most of which is of uniformly poor quality and subject to numerous, and often quite obvious, sources of bias and confounding.

That is not to say that polygraphs are a total washout like their voice-based counterparts, which are pure pseudoscience. Rather, as the report notes:

– The scientific base for polygraph testing is far from what one would like for a test that carries considerable weight in national security decision making. Basic scientific knowledge of psychophysiology offers support for expecting polygraph testing to have some diagnostic value, at least among naive examinees. However, the science indicates that there is only limited correspondence between the physiological responses measured by the polygraph and the attendant psychological brain states believed to be associated with deception – in particular, that responses typically taken as indicating deception can have other causes.

To be clear, in very carefully controlled laboratory experiments, polygraphs have been shown to be capable of detecting deception at levels greater than chance but some way short of perfection. Nevertheless, it remains the case that the stress/arousal responses that polygraph examiners take as being indicative of deception can have other causes, and it is simply not possible to make an accurate determination of the actual cause of a specific response in a specific individual. There are, therefore, serious questions not just of reliability but of ecological validity, which place severe limits on the extent to which laboratory studies can be taken as credible evidence in support of the use of polygraphs in real-world settings, some of the reasons for which are also outlined in the report:

– The accuracy of polygraph tests can be expected to vary across situations because physiological responses vary systematically across examinees and social contexts in ways that are not yet well understood and that can be very difficult to control. Basic research in social psychophysiology suggests, for example, that the accuracy of polygraph tests may be affected when examiners or examinees are members of socially stigmatized groups and may be diminished when an examiner has incorrect expectations about an examinee’s likely innocence or guilt. In addition, accuracy can be expected to differ between event-specific and screening applications of the same test format because the relevant questions must be asked in generic form in the screening applications. Accuracy can also be expected to vary because different examiners have different ways to create the desired emotional climate for a polygraph examination, including using different questions, with the result that examinees’ physiological responses may vary with the way the same test is administered. This variation may be random, or it may be a systematic function of the examiner’s expectancies or aspects of the examiner-examinee interaction. In either case, it places limits on the accuracy that can be consistently expected from polygraph testing.

In broad terms, the ‘results’ of a polygraph test are based entirely on inferences made by the examiner from the test subject’s measured reactions to specific questions, i.e. it is assumed that if the test subject exhibits signs of physiological stress when answering a specific question then they have given a deceptive answer. That assumption is not always a valid one. It is equally possible for a test subject to become stressed/aroused during a test simply because they are offended or angered by the mere fact of their honesty being questioned, or by the tone or manner in which a question is asked, or because they find the examiner and/or the testing procedure intimidating, or even because their mind is actually on something completely unrelated to the test, which may or may not have been triggered by the testing procedure. And there is no way of accurately or reliably distinguishing the physiological stress/arousal responses generated by any of those alternative causes from those generated by lying.

Before pressing on to look at specific issues relating to the use of polygraphs in the management of sex offenders, including the results of the ostensibly successful UK pilot mentioned by the Guardian, there is one further general issue that requires careful consideration, that of the bogus pipeline effect:

There is no scientific evidence on the ability of the polygraph to elicit admissions and confessions in the field. However, anecdotal reports of the ability of the polygraph to elicit confessions are consistent with research on the “bogus pipeline” technique (Jones and Sigall, 1971; Quigley-Fernandez and Tedeschi, 1978; Tourangeau, Smith, and Rasinski, 1997).

In bogus pipeline experiments, examinees are connected to a series of wires that are in turn connected to a machine that is described as a lie detector but that is in fact nonfunctional. The examinees are more likely to admit embarrassing beliefs and facts than similar examinees not connected to the bogus lie detector. For example, in one study in which student research subjects were given information in advance on how to respond to a classroom test, 13 of 20 (65 percent) admitted receiving this information when connected to the bogus pipeline, compared to only 1 of 20 (5 percent) who admitted it when questioned without being connected (Quigley-Fernandez and Tedeschi, 1978).

Admissions during polygraph testing of acts that had not previously been disclosed are often presented as evidence of the utility and validity of polygraph testing. However, the bogus pipeline research demonstrates that whatever they contribute to utility, they are not necessarily evidence of the validity of the polygraph. Many admissions do not depend on validity, but rather on examinees’ beliefs that the polygraph will reveal any deceptions. All admissions that occur during the pretest interview probably fall into this category. The only admissions that can clearly be attributed to the validity of polygraph are those that occur in the post-test interview in response to the examiner’s probing questions about segments of the polygraph record that correctly indicated deception. We know of no data that would allow us to estimate what proportion of admissions in field situations fall within this category.

Even admissions in response to questions about a polygraph chart may sometimes be attributable to factors other than accurate psycho-physiological detection of deception. For example, an examiner may probe a significant response to a question about one act, such as revealing classified information to an unauthorized person, and secure an admission of a different act investigated by the polygraph test, such as having undisclosed contact with a foreign national. Although the polygraph test may have been instrumental in securing the admission, the admission’s relevance to test validity is questionable. To count the admission as evidence of validity would require an empirically supported theory that could explain why the polygraph record indicated deception to the question on which the examinee was apparently nondeceptive, but not to the question on which there was deception.

There is also a possibility that some of the admissions and confessions elicited by interrogation concerning deceptive-looking polygraph responses are false. False confessions are more common than sometimes believed, and standard interrogation techniques designed to elicit confessions — including the use of false claims that the investigators have definitive evidence of the examinee’s guilt — do elicit false confessions (Kassin, 1997, 1998). There is some evidence that interrogation focused on a false-positive polygraph response can lead to false confessions. In one study, 17 percent of respondents who were shown their strong response on a bogus polygraph to a question about a minor theft they did not commit subsequently admitted the theft (Meyer and Youngjohn, 1991).

As with deterrence, the value of the polygraph in eliciting true admissions and confessions is largely a function of an examinee’s belief that attempts to deceive will be detected and will have high costs. It likely also depends on an examinee’s belief about what will be done with a “deceptive” test result in the absence of an admission. Such beliefs are not necessarily dependent on the validity of the test.

The Polygraph and Lie Detection, p55-56

If you’ll forgive the lengthy quotation, a clear understanding of the nature of the bogus pipeline effect and its implications for field studies that purport to validate the use of polygraphs in managing sex offenders is crucial to everything else that follows, because it accounts for the majority of ‘clinically significant disclosures’ recorded in the evaluation of the MoJ pilot study, as indicated by this table:

For the record, a clinically significant disclosure in this study means the disclosure of one or more current or historical behaviours associated with a subject’s pattern of offending, and includes any of the following:

– Thoughts, feelings and attitudes (e.g. abusive fantasies and desires)

– Sexual behaviour (e.g. use of pornography)

– Historical information (e.g. admitted a previously unknown offence)

– Changes of circumstance/risky behaviour (e.g. increased access to children, contact with other sex offenders, etc.)

In all, offenders in the polygraph group made, on average, a little over twice as many clinically significant disclosures as those in the control group, with 57.3% of those disclosures being directly elicited by the polygraph sessions and a further 5.4% being made in routine supervision in circumstances that led their Offender Manager to perceive those disclosures as being related to the polygraph sessions.

Overall, therefore, 74.7% of the disclosures identified in the study as being directly or indirectly attributable to the use of the polygraph were made either prior to or during a test, i.e. as a result of the bogus pipeline effect. As for the remaining 25.3% of disclosures, which were made either during a post-test interview or in supervision following a test, we have no way of knowing what proportion of these represent the successful detection of an attempted deception by the polygraph examiner. That is given what the NRC report has to say both about the possibility of a test prompting the disclosure of information that is clinically significant but unrelated to the question which elicited a stress response during the test, and about the possibility, if not the likelihood, of a test prompting false disclosures.
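As a rough check on what those percentages mean for the polygraph group’s disclosures as a whole, the arithmetic can be sketched in a few lines of Python. This assumes, as the wording above suggests, that the 74.7%/25.3% split applies to the 62.7% of disclosures (57.3% plus 5.4%) attributed to the polygraph, and that every ‘before or during a test’ disclosure falls within the 57.3% elicited in polygraph sessions. The variable names are my own, not the report’s.

```python
# Percentages of ALL clinically significant disclosures made by the polygraph group,
# as quoted in the text above.
elicited_in_session = 57.3   # made during polygraph sessions
supervision_related = 5.4    # made in supervision, judged to be polygraph-related

# Assumption: this split applies to the polygraph-attributable disclosures only.
pre_or_during_share = 74.7   # % made before or during a test

attributable = elicited_in_session + supervision_related
pre_or_during = attributable * pre_or_during_share / 100
post_test_interview = elicited_in_session - pre_or_during
after_test_total = attributable - pre_or_during  # post-test interview + supervision

print(f"polygraph-attributable: {attributable:.1f}% of all disclosures")
print(f"made before/during a test: {pre_or_during:.1f}% of all disclosures")
print(f"made in a post-test interview: {post_test_interview:.1f}% of all disclosures")
print(f"made after a test (interview or supervision): {after_test_total:.1f}% of all disclosures")
```

On those assumptions, roughly 47% of all the polygraph group’s disclosures were made before or during a test, where the bogus pipeline effect alone could account for them, and only around 10% came from post-test interviews, the one setting in which the NRC accepts a disclosure might reflect the polygraph’s validity.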

One of the key problems with sex offender research, generally, is the context in which it is almost always conducted, i.e. in prison and/or in a therapeutic or offender management setting, which can itself prompt offenders to make false or inaccurate disclosures in the belief that telling a psychiatrist or probation officer what they want to hear will help their case for parole or lead to a relaxation of their licence conditions. Treatment programmes into which at least some sex offenders are enrolled, either in prison or following their release on licence, tend to require complete honesty as a precondition of successfully completing the programme, while in routine supervision sessions with probation officers some degree of voluntary disclosure of potentially risky behaviours or changes in circumstances can help the offender convince their supervisor that they’re making a sincere effort to avoid further reoffending... but only to a point.

As should be obvious, it is not in an offender’s interest to disclose information that may have a significant adverse effect on their chances of parole or, if they’re already on licence, information that might well lead to their being recalled to prison. So, although the system works to incentivise disclosures, there are trade-offs involved when it comes to exactly what a particular offender may or may not be willing to disclose. They are unlikely to disclose information that suggests that they continue to pose a serious risk to the public or that indicates that they have continued to offend after being released on licence, and may seek to cover themselves by fabricating accounts of relatively trivial infractions as a means of lulling their psychiatrist or probation officer into a false sense of security and deflecting attention away from much more serious risks/behaviours.

Irrespective of how disclosures are obtained, whether voluntarily or by using a polygraph, the reliability of any information disclosed by an offender is likely to be questionable at best, not least because the veracity of that information will often be extremely difficult, if not impossible, to verify. Clearly, if an offender admits to having had abusive fantasies and desires there is no independent empirical means by which the truthfulness of such a disclosure can be established. But even if a disclosure provides information that could, at least in principle, be investigated and independently corroborated, as would be the case with the disclosure of a previously unknown offence, there is no guarantee that the offender will provide sufficient information about that offence to enable an investigation to take place, or that the information given is accurate. There are any number of ways in which an offender can disclose a previously unknown offence while making it impossible for the truth, or otherwise, of their disclosure to be investigated and/or corroborated, not least because a very high proportion of sexual offences routinely go unreported. They can choose to withhold information that might make it possible to identify a victim, or provide a deliberately vague or misleading description of the victim and/or the circumstances in which the offence took place, changing the date or location in order to misdirect any attempts made to verify their story.

Taken together, the inherent problems that those involved in the treatment and management of sex offenders face when it comes to validating the accuracy of disclosures made by offenders make a complete mockery of one of the more common arguments put forward by proponents of the use of polygraphs in these settings, i.e. that it enables them to obtain far more information about offenders, their history of offending and their overall pathology than would be the case were they to rely solely on voluntary disclosures made during routine treatment/supervision. Any additional information elicited by way of the polygraph is only of genuine value if that information is accurate, truthful and reliable. If it isn’t, and as already noted the accuracy of information obtained via polygraph sessions will often be impossible to verify, then we run headlong into the age-old problem of ‘garbage in, garbage out’.

Needless to say, the evaluation of the MoJ pilot makes no real effort to address the problems inherent in assessing the accuracy, reliability and truthfulness of disclosures, relying instead on ‘evidence’ from self-report interviews with offenders who took part in the pilot (see appendix 6 for interview questions), using a sample of just 15 of the offenders in the polygraph group and 10 from the control group:

Several statements indicated that the polygraph did not provoke more discussion with offender managers, and some statements indicated that offenders were not happy with a machine that implied that they had not been truthful. Other claims, however, indicated that the polygraph made them more honest with their offender manager and that being tested resulted in more open discussions. Nearly half the offenders admitted making disclosures during the polygraph session, and most of these admitted they would not have done so if they were not being tested. The same number had not made any disclosures (one was unclear). Even though many comments reflected offenders’ distrust of the polygraph, several statements indicated offenders’ beliefs that the polygraph should be used with all offenders. Some stated that they had met sexual offenders who were devious and that the polygraph would be useful for these people.

Given the circumstances in which the pilot was conducted, perhaps the only thing one can say in response to the statement that some offenders claimed that the polygraph made them ‘more honest’ with their offender manager is that they would say that, wouldn’t they? After all, it would hardly be in their best interests to admit to having given false or inaccurate information and risk finding themselves placed under more stringent supervision or even being recalled to prison as a result.

Another key issue inherent in the use of polygraphs in the management and treatment of sex offenders that receives only cursory consideration in the MoJ evaluation report is the set of problems that arise from retesting offenders repeatedly, as set out here in an excellent 2008 paper by Meijer et al., which, unsurprisingly, does not appear amongst the references cited in that report:

The second factor that threatens CQT accuracy in PCSOT is repeated testing of the same offender. This can deteriorate the test outcome for a number of reasons. To begin with, it is well known that physiological responsivity decreases upon repeated presentation of the same stimulus (i.e., habituation; Andreassi, 2000). Thus, the “guilty” sex offender may not show a marked physiological response to the relevant question after having been repeatedly confronted with it, thereby raising the probability of a false negative test outcome. Repeated testing is also likely to reduce the emotionally provocative loading of the comparison question, thereby increasing the probability of a false positive test result in “innocent” sex offenders. Furthermore, repeated testing increases the probability of the effective use of countermeasures by the offender. Countermeasures refer to everything the examinee can do in order to alter the test outcome (Honts & Amato, 2002). The examinee might use physical countermeasures such as biting of the tongue in order to create a physical response to the comparison question. Alternatively, he or she might try to alter physiological responses using mental countermeasures, by thinking about something exciting during the comparison question, for example. Several studies have shown that mental countermeasures are especially problematic because they are not easily detected by the examiner (for a review, see Honts & Amato, 2002). Honts (2004) has suggested that countermeasures may only be effective if the examinee is able to practice them. Clearly, repeated testing not only familiarizes the examinee with the procedure, but also gives the examinee the opportunity to practice countermeasures.

* Sorry about the acronyms there. ‘CQT’ is ‘Comparison Question Technique’, the specific type of polygraph testing used with sex offenders, in which the physiological responses elicited by the relevant questions are compared with those elicited by comparison (or ‘control’) questions asked during the same test, which serve as a point of reference for the test subject. As for ‘PCSOT’, that’s ‘Post-Conviction Sex Offender Testing’ (i.e. polygraph testing), which should be self-explanatory.
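To make the comparison logic, and Meijer et al.’s habituation point, a little more concrete, here is a deliberately simplified toy in Python that classifies a test as ‘Deception Indicated’ (DI), ‘No Deception Indicated’ (NDI) or inconclusive (INC). Everything in it is hypothetical: the response values are invented, the -1/0/+1 per-question scale, the 0.1 margin and the cutoff of 2 are illustrative stand-ins rather than the scoring rules used by real polygraph examiners or in the MoJ pilot, and ‘habituation’ is crudely modelled as a fixed shrinkage of the responses to the relevant questions.

```python
from dataclasses import dataclass


@dataclass
class QuestionPair:
    """One relevant question and the comparison question it is scored against.

    Values are hypothetical response magnitudes in arbitrary units.
    """
    relevant: float
    comparison: float


def score_pair(pair: QuestionPair, margin: float = 0.1) -> int:
    """Score one pair on a simplified -1/0/+1 scale (illustrative only)."""
    diff = pair.comparison - pair.relevant
    if diff > margin:
        return 1   # stronger reaction to the comparison question: 'truthful' direction
    if diff < -margin:
        return -1  # stronger reaction to the relevant question: 'deceptive' direction
    return 0       # difference too small to call either way


def classify(pairs: list[QuestionPair], cutoff: int = 2) -> str:
    """Sum the pair scores and map the total to DI, NDI or INC."""
    total = sum(score_pair(p) for p in pairs)
    if total <= -cutoff:
        return "DI"
    if total >= cutoff:
        return "NDI"
    return "INC"


def habituate(pairs: list[QuestionPair], factor: float) -> list[QuestionPair]:
    """Crude habituation model: shrink relevant-question responses by `factor`."""
    return [QuestionPair(p.relevant * factor, p.comparison) for p in pairs]


# Invented responses for an examinee who reacts more strongly to the relevant questions.
first_test = [
    QuestionPair(relevant=1.0, comparison=0.6),
    QuestionPair(relevant=0.9, comparison=0.5),
    QuestionPair(relevant=0.8, comparison=0.75),
]

print(classify(first_test))                  # DI
print(classify(habituate(first_test, 0.4)))  # NDI
```

With these invented numbers, the same ‘guilty’ response pattern that scores as DI on a first test comes out as NDI once its relevant-question responses have shrunk to 40% of their original size, which is the false negative mechanism Meijer et al. describe.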

Of the issues outlined by Meijer et al., only that of habituation gets any mention in the MoJ report:

– There is a trend for medium- and high-/very high-risk offenders to be more likely to receive a DI result on their first test, compared to low-risk offenders. However, the percentage of medium- and high-/very high-risk offenders receiving a DI result decreases at test 2, when offenders have gained experience of polygraph testing. The numbers of test 3 polygraphs were too small to draw any meaningful conclusions. This may reflect that offenders become desensitised to the testing experience over time, or that they are more truthful the longer they are on licence in the community.

– Although there is a trend for the average number of CSDs made in the polygraph session to decline as offenders experienced more polygraph testing (0.98 CSDs at test 1, 0.77 CSDs at test 2, 0.47 at test 3), this difference did not reach statistical significance. During a first test, statistically, offenders made significantly more CSDs following a DI result compared with an NDI or inconclusive result (INC). This difference dissipated on subsequent tests when offenders received fewer DI test results.

So there is at least some recognition of the possibility of habituation affecting results on subsequent tests, but no mention whatsoever of the possibility of the use of countermeasures as a source of confounding.

The results to which this section of the report relates are set out in the following tables:

The first thing to note here is that there is quite clearly a problem with the data reported in Table 3.2 for offenders who underwent a third test. Although the text in the report immediately preceding this table states that 74 offenders in the polygraph group underwent three or more tests (61 were tested three times, 11 on four occasions and two had five tests, one of whom went on to have a sixth test), the number of tests reported for test 3 adds up to 84 (or 78 if you exclude from consideration those for whom the test was not completed). And if we look just at the figures for ‘Deception Indicated’, then 19 out of 84 tests is not 9.6% but 22.6%, an increase of 4.4 percentage points over the results of the second test, albeit from a smaller number of test subjects.
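The sums behind those objections are easy to re-run. The short Python sketch below uses only the figures quoted above from the report (it does not draw on the report’s raw data, which I don’t have); the variable names are my own.

```python
# Figures as quoted above from the MoJ evaluation report.
tested_three_times = 61
tested_four_times = 11
tested_five_or_more = 2        # one of whom went on to have a sixth test
tests_reported_at_test_3 = 84
tests_completed_at_test_3 = 78
di_results_at_test_3 = 19      # 'Deception Indicated'
reported_di_rate = 0.096       # the percentage printed in Table 3.2

offenders_with_3_plus = tested_three_times + tested_four_times + tested_five_or_more
print(f"offenders with three or more tests: {offenders_with_3_plus}")
print(f"test 3 entries in excess of offenders tested: "
      f"{tests_reported_at_test_3 - offenders_with_3_plus}")

# What 19 DI results actually amount to, with and without incomplete tests.
print(f"DI rate, all reported tests: {di_results_at_test_3 / tests_reported_at_test_3:.1%}")
print(f"DI rate, completed tests only: {di_results_at_test_3 / tests_completed_at_test_3:.1%}")

# How many DI results a 9.6% rate would correspond to out of 84 tests.
print(f"DI results implied by the printed rate: {reported_di_rate * tests_reported_at_test_3:.1f}")
```

Run as written, this gives 74 offenders against 84 reported tests (10 more tests than offenders), a DI rate of 22.6% (24.4% if incomplete tests are excluded), and shows that the printed 9.6% would correspond to only about 8 DI results rather than 19.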

There is also a noticeable doubling in the percentage of incomplete tests at both the second test and again at the fourth test, which could be nothing more than a statistical artefact arising from the fall in the number of offenders tested at each repetition but equally could indicate offenders withdrawing from the pilot after receiving a false positive result in a previous test. It is also noticeable that the report does not provide any figures whatsoever for the number of test subjects who generated a ‘Deception Indicated’ result without making any kind of relevant disclosure either during or following the test, even though there were six reports of the test ‘lying’ recorded in the qualitative data from the sample of fifteen offenders who were interviewed about their perceptions of, and feelings about, having undergone polygraph testing.

This brings us to a key issue that the evaluation report almost completely ignores: the risk of both false positives and false negatives, particularly when offenders are subjected to repeated testing.

For this next bit we need to delve into the realms of diagnostic accuracy, which is measured, at least in part, in terms of the sensitivity and specificity of the test being used. For example, if we consider a common diagnostic test used in medicine such as mammography, which is used to screen women for signs of breast cancer, then overall the most recent data from the US Breast Cancer Surveillance Consortium indicates that, when used to screen for breast cancer, mammography has a sensitivity of 85% and a specificity of 90.6%.

What this means in practice is that, based on the sensitivity of the test, for every 100 women you screen for breast cancer who actually have the disease, a mammography test will correctly identify 85, giving a [true] positive result, while the remaining 15 will receive a [false] negative result, i.e. the test will miss the fact that they have breast cancer. That may, at first sight, seem a worryingly low figure, but in the majority of cases the reason that a mammography test fails to pick up a cancer is that the tumour is, at that stage, too small to be detected reliably by this particular test. As most breast cancers develop relatively slowly to begin with, it’s likely that the cancer will be picked up at the patient’s next screening appointment, at a stage when it is still very much amenable to successful treatment.

Likewise, when we look at the specificity of the test (90.6%), what this means is that for every 100 women you screen for breast cancer who don’t have the disease, the test will correctly identify either 90 or 91, giving a [true] negative result, while either 9 or 10 will receive a [false] positive result and will be incorrectly diagnosed as having breast cancer. Again, this is not necessarily anything to worry about, as any positive results generated by mammography tests will typically be subject to further confirmatory testing, usually by way of a tissue biopsy, allowing women who did receive a false positive to be identified before they undergo any further invasive treatment.
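The per-100 figures follow directly from the two definitions: sensitivity is the share of people who have the condition whom the test correctly flags as positive, and specificity is the share of people who don’t have it whom the test correctly clears. A minimal Python sketch using the Breast Cancer Surveillance Consortium figures quoted above (the function name is just an illustrative choice):

```python
def per_hundred(sensitivity: float, specificity: float) -> dict[str, float]:
    """Expected outcomes per 100 people WITH the condition and per 100 WITHOUT it."""
    return {
        # Applied to 100 people who have the condition.
        "true_positive": 100 * sensitivity,
        "false_negative": 100 * (1 - sensitivity),
        # Applied to 100 people who don't have the condition.
        "true_negative": 100 * specificity,
        "false_positive": 100 * (1 - specificity),
    }


outcomes = per_hundred(sensitivity=0.85, specificity=0.906)
for name, count in outcomes.items():
    print(f"{name}: {count:.1f}")
# true_positive: 85.0, false_negative: 15.0, true_negative: 90.6, false_positive: 9.4
```

The key point is that the two numbers apply to two different groups: sensitivity says nothing about the healthy women, and specificity says nothing about the women who have cancer.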

Okay, so overall mammography is some way short of being a perfect test for breast cancer and will generate both false positives and false negatives. But when you take into account the fact that further confirmatory tests are available which can strip out the majority of false positives, and that the consequences for most women of a single false negative are likely to be relatively minor unless they’re unlucky enough to develop a particularly aggressive cancer (which is, fortunately, quite rare), then it all adds up to mammography being a pretty decent method of conducting breast cancer screening and one that’s somewhat more effective than any of the currently available alternatives.

So that’s pretty much how we measure the diagnostic accuracy of a test in terms of its sensitivity and specificity and, in general terms, the accuracy of polygraph testing can be evaluated and assessed in much the same terms, albeit that we have to take into account that, unlike mammography, there are typically no backup tests we can run to reliably establish the accuracy of polygraph testing in the field, e.g. when it’s used to screen sex offenders, beyond relying on self-report information supplied by the test subjects who, as we’ve already seen, may not always be entirely truthful. Over the years, a number of studies have been published which provide estimates for the accuracy of polygraph testing, as reported here in Meijer et al. (2008):

A large number of studies addressed the accuracy rates of the CQT in specific-incident testing (e.g., tests in criminal investigations). Mock crime study estimates range from 74-80% for guilty participants, and 63-66% for innocent participants (Ben Shakhar & Furedy, 1990; Kircher, Horowitz, & Raskin, 1988). The false positive ratio (i.e., innocent examinees classified as guilty) was estimated at 12-15%, and the false negative ratio (i.e., guilty examinees classified as innocent) was 7-8%. Field study estimates of the accuracy of the CQT are slightly higher, ranging from 84-89% for guilty, and 59-72% for innocent suspects, with estimated false positive rates of 12-23% and false negative rates of 1-13% (Ben Shakhar & Furedy, 1990; Raskin & Honts, 2002).

Recently, the National Research Council (NRC, 2003) evaluated the validity of the CQT for screening purposes. These tests are used in the United States to screen job applicants and monitor employees of government agencies whose work involves security risks (e.g., FBI applicants or nuclear scientists; Krapohl, 2002). When reviewing the evidence, the NRC concluded that there were no high quality studies on the use of the CQT for screening purposes. Therefore, the council based its review on studies that addressed the validity of the CQT in specific-incident testing. Reviewing this evidence, the NRC noted that most studies do not reach high levels of scientific quality. For example, nearly all studies are based on naive participants, threatening ecological validity. Still, the Council selected 37 laboratory studies and 7 real-life field studies that met the minimum criteria for its analysis. The Council concluded that specific-incident polygraph tests can discriminate lying from truth telling at rates well above chance, though well below perfection. Furthermore, the Council pointed out that accuracy for screening purposes is almost certainly lower than what can be achieved for specific-incident testing. The latter is especially relevant because the use of the CQT in PCSOT predominantly involves questioning sex offenders about unknown incidents, thus bearing a stronger resemblance to screening than to specific incidence testing (Abrams & Abrams, 1993). The main difference between specific incidence and screening tests is that during a specific-incident polygraph test, a suspect is questioned about a known incident, such as a murder, theft or arson. In a screening test, however, someone is questioned about incidents of which it is unknown whether they have taken place, such as disclosing classified information.

Okay, so the data given there comes with some pretty hefty caveats, given the noted difference between the use of polygraphs for specific-incident testing and their use for screening purposes, but in the next section of the paper Meijer et al. provide the following information about studies conducted specifically on the accuracy of polygraph testing in the screening of sex offenders:

We found no studies that made a serious attempt to estimate the validity of the CQT in PCSOT. The only studies that tried to estimate it limited themselves to offenders’ self-reports of test accuracy. Kokish, Levenson, and Blasingame (2005), for example, collected self-report data from 95 sex offenders who underwent a total of 333 tests. Eighteen offenders (19%) reported having been incorrectly labelled deceptive, and 6 (6%) claimed they had incorrectly been found truthful. Similar statistics were reported by Grubin and Madsen (2006). They collected self-report data from 126 offenders which completed a total of 263 tests. Of these 126 offenders, 27 (21%) reported having been incorrectly found deceptive, and 6 (5%) reported they had been wrongly labelled truthful.

Although such results might look encouraging, these studies suffer from a major methodological pitfall, namely sampling bias. Participation in a polygraph treatment program is voluntary. In a prospective study by Grubin et al. (2004), only 21 out of the 116 offenders (18%) that were initially approached, completed both polygraph tests that were part of the study. Accuracy rates based upon this small sample of offenders may thus very well be an overestimation. Offenders that were confronted with an incorrect test result may have simply dropped out.

As luck would have it, the 2006 study by Grubin and Madsen was published in the British Journal of Psychiatry, so I could readily obtain a full copy of the paper, which gives the following information for test accuracy in the study:

Okay, so Grubin & Madsen’s figures for sensitivity and specificity are in the same ballpark as those reported in other polygraph studies and also not that dissimilar to the figures for the use of mammography in breast cancer screening, which may lead you to think that although polygraph testing is far from perfect, if it functions at similar levels of accuracy to tests that we rely on to screen for breast cancer then that’s surely an acceptable degree of error.

Well, no – for starters, we have no way of knowing for sure how accurate the self-report data used to assess the accuracy of polygraph tests in this study might be, and no other backup test to confirm accuracy. We’re also looking here at data from a very small number of tests, 263 in total, which were conducted on just 126 sex offenders, and the small number of tests and offenders involved in this study may easily have led it to over-estimate the accuracy of polygraph testing, not least because of the problem of sampling bias. Participation in this particular study was voluntary, and the paper notes that 321 offenders were initially approached about taking part in the study, of whom 176 (54.8%) agreed to take part. Of these, the paper states that 174 provided information about ‘previous polygraph tests’, although 48 reported that they had not yet had their first polygraph examination but were scheduled to do so, leaving 126 offenders who provided data that could be used to assess the accuracy of polygraph testing, which is just 39.3% of the total number of offenders approached to take part in the study.
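The participation figures above can be checked with a few lines of Python. This is only a sketch of the arithmetic using the numbers quoted from the paper; the variable names and the `pct` helper are my own.

```python
# Participation figures reported for Grubin and Madsen (2006), as summarised above.
approached = 321               # offenders initially approached
agreed = 176                   # agreed to take part
gave_previous_test_info = 174  # provided information about previous polygraph tests
not_yet_tested = 48            # scheduled for, but had not yet had, a first test

# Offenders whose self-reports could actually be used to assess accuracy.
usable = gave_previous_test_info - not_yet_tested

def pct(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`."""
    return 100 * part / whole

print(f"Agreed to take part: {pct(agreed, approached):.1f}% of those approached")
print(f"Usable data: {usable} offenders, {pct(usable, approached):.1f}% of those approached")
```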

By comparison, the sensitivity and specificity figures for mammography are based on data from 2.26 million mammography examinations carried out in the US between 2002 and 2006.

We also have to take into account the fact that, in the absence of reliable confirmatory tests, the consequences of either a false positive or a false negative result are potentially going to be much more serious when it comes to the polygraph testing of sex offenders than will typically be the case for women undergoing mammography screening. In the latter case, a false positive will mean women undergoing further tests and, in all likelihood, an invasive medical procedure – a tissue biopsy – but at least that will determine whether a woman actually has breast cancer or not, while a false negative may delay a woman with breast cancer receiving treatment; but unless the cancer is particularly aggressive, or a woman is particularly unlucky and has a series of false negatives, such a delay is unlikely to adversely affect either her treatment options or her probability of survival once the cancer is discovered.

When it comes to sex offenders and false positives/negatives in polygraph screening, at the very least a false positive is likely to lead to an offender being subjected to further questioning, and perhaps even a full interrogation, in an effort to ascertain why their test indicated deception, and there is therefore a risk that this may elicit a false confession, as Grubin and Madsen’s paper notes:

Twelve participants (10%) reported making false admissions regarding their behaviour at some stage during a post-conviction polygraph test, of whom only 5 claimed to have been wrongly labelled as being deceptive. The main reasons given for false admissions were the fear of getting in trouble with probation officers in three cases, and feeling pressured by the polygraph examiner in another three cases. Other reasons were wanting to make a good impression, ‘confusion’, ensuring that the test was passed, and wanting to demonstrate commitment to therapy.

If a confession is not forthcoming then it’s possible, if not likely, that the police will be tasked with carrying out an investigation into the offender’s recent activities, which may well prove to be a wild goose chase and a waste of police time and resources.

Ultimately, a false positive, whether it elicits a false confession or not, may result in an offender being subjected to more stringent supervision, being reclassified into a higher risk category or even being recalled to prison on the assumption that their test indicates not only that they may have breached the terms of their release on licence but also, should they stick to their guns and assert that it was the test itself that was wrong, that they’re lying to conceal the fact that they’ve either breached their licence or engaged in activities that might lead to them breaching their licence or possibly even reoffending in the near future.

As for false negatives, where these occur it will mean that the testing has failed to alert the authorities to the fact that an offender has been engaging in activities that might indicate either an increased likelihood of reoffending or even that they have already begun to reoffend. Beyond the potential immediate consequences of such a failure, numerous studies looking at the use of test equipment and procedures in a wide variety of settings have shown that, over time, people will typically come to rely increasingly on such equipment/procedures to the exclusion of exercising their own judgement, even if they are aware that a test is fallible and suspect that it may have given a false reading/outcome.

This being the case, the fact that the MoJ evaluation report includes the following statement in its section on the qualitative analysis of offender managers’ views of the pilot is somewhat troubling:

Some offender managers stated that supervision improved when polygraph results showed no deception, as it reassured them of the offender’s honesty and gave the offender more confidence as to their understanding of and ability to stick with their licence conditions.

On a realistic appraisal of the evidence relating to the use of polygraphs in screening sex offenders, any reassurance that those involved in the management of offenders might gain as to the honesty of offenders can only reasonably be described as misplaced.

Thus far we’ve only looked at polygraph testing in terms of the evidence for its accuracy and reliability in single, independent tests. Although the results from Grubin & Madsen are derived from reports of multiple tests on each individual offender who supplied self-report data, which is why we have data for 263 tests from just 126 offenders, their estimates for the accuracy of polygraph screening and the sensitivity and specificity of the test are based on calculations which assume these tests to be independent, so we can only work with their figures on that basis.

The study reports a sensitivity of 84% and a specificity of 85%, giving us a false positive rate of 15% and a false negative rate of 16% for single tests. However, we know that once testing becomes compulsory in the UK, most sex offenders who are made subject to it are highly likely to be subjected to regular testing, although at what frequency is as yet unknown, as the government has yet to put in place any specific regulations relating to the use of polygraph testing.

In the US, testing is generally conducted at six-monthly intervals, although medium and high risk offenders may be tested more frequently, and from the data included in the MoJ evaluation we know that amongst the offenders who took part in the pilot the average length of sentence was 66 months, i.e. five and a half years, so if an average offender were to be released on licence after completing half of their sentence in prison they would spend a further 33 months on licence until the sentence finally expires. Assuming a minimum six-monthly testing regime, our hypothetical average offender is likely to be tested on at least six occasions while on licence and may indeed be tested more frequently than that – remember, of the offenders that took part in the pilot, which lasted 12 months, 74 were tested on three or more occasions.
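A rough sketch of the licence-period arithmetic, under stated assumptions: release at the halfway point of the sentence, a test every six months, and (hypothetically) a first test at the point of release. If the first test instead came six months after release, the same logic gives five tests rather than six.

```python
# Assumptions (illustrative only): release at the halfway point of the sentence,
# a test every 6 months, and the first test at the point of release (month 0).
SENTENCE_MONTHS = 66       # average sentence length in the MoJ pilot
TEST_INTERVAL_MONTHS = 6   # typical US testing interval

licence_months = SENTENCE_MONTHS // 2  # months spent on licence after release

# Test dates at months 0, 6, 12, ... that fall before the licence expires.
test_months = list(range(0, licence_months, TEST_INTERVAL_MONTHS))

print(f"Months on licence: {licence_months}")
print(f"Tests at months {test_months}: {len(test_months)} tests in total")
```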

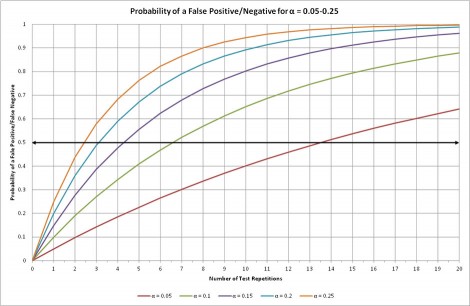

If we repeat the same test over and over again on a test subject whose circumstances and behaviour haven’t changed in the slightest, the probability of the test returning at least one false positive or false negative will increase with each repetition. Treating the tests as independent, the exact probability of a series of tests generating at least one false result can be calculated using the formula $p = 1 - (1 - \alpha)^n$, where $\alpha$ is the probability of a false positive/negative on a single test and $n$ is the number of repetitions. The following graph shows the probability of repeated testing of an individual test subject generating a false positive/negative for values of $\alpha$ from 0.05 (5%) to 0.25 (25%) in increments of 0.05 over the course of 20 tests:

As you can see from the graph, for a test with a sensitivity and specificity of 85% and a 15% rate of both false positives and false negatives, which is as near as damn it the figures provided by Grubin and Madsen, the probability of generating at least one erroneous test result will exceed 50% by the fifth test and 90% by the fifteenth test. For sex offenders who are serving out long sentences, or those who are subjected to testing at shorter intervals by virtue of their being deemed to be at a high risk of reoffending, what this graph tells us is that they are highly likely to generate at least one false positive (or possibly negative) due simply to the use of repeat testing, even if they comply in full with their licence conditions and are completely honest during both testing and routine supervision.
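For readers who want to reproduce the curves and check the two thresholds quoted above, the following Python sketch tabulates $1 - (1 - \alpha)^n$ for $\alpha$ = 0.05 to 0.25 over 20 tests, then steps through repeated tests until the probability of at least one error passes 50% and 90%. It assumes, as Grubin and Madsen’s figures do, that tests are independent; the function names are my own.

```python
def prob_at_least_one_error(alpha: float, n: int) -> float:
    """P(at least one false result) over n independent tests, each wrong with probability alpha."""
    return 1 - (1 - alpha) ** n

def first_test_exceeding(alpha: float, threshold: float, max_tests: int = 1000) -> int:
    """Smallest n for which prob_at_least_one_error(alpha, n) exceeds threshold."""
    for n in range(1, max_tests + 1):
        if prob_at_least_one_error(alpha, n) > threshold:
            return n
    raise ValueError("threshold not reached within max_tests")

# Tabulate the curves for alpha = 0.05 ... 0.25 over 20 tests.
alphas = [0.05, 0.10, 0.15, 0.20, 0.25]
print("n   " + " ".join(f"a={a:.2f}" for a in alphas))
for n in range(1, 21):
    print(f"{n:<3} " + " ".join(f"{prob_at_least_one_error(a, n):6.3f}" for a in alphas))

# Roughly the Grubin and Madsen single-test error rate of 15%.
print(first_test_exceeding(0.15, 0.50))  # -> 5
print(first_test_exceeding(0.15, 0.90))  # -> 15
```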

This is an issue that the MoJ evaluation completely ignores, as did Grubin and Madsen in their study, despite it being one that has potentially serious implications for the compulsory use of polygraph screening with sex offenders. Consider, for example, the situation a low risk sex offender could find themselves in if a false positive on a polygraph test results in their being recategorised to a higher level of risk and subjected to more frequent testing as a result: the increase in the frequency of testing alone will act to increase the likelihood of the same offender generating another false positive result which, in turn, may very well increase the likelihood of their being recalled to prison to serve out the remainder of their sentence.
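To put rough numbers on the escalation scenario just described, the sketch below compares the chance of at least one further false positive for an honest offender tested six-monthly versus three-monthly. The 24 months of remaining licence and the three-monthly interval for a “high risk” offender are assumptions chosen purely for illustration; the 15% false positive rate is the approximate single-test figure from Grubin and Madsen, and tests are again treated as independent.

```python
# Hypothetical scenario (illustrative assumptions, not MoJ policy):
ALPHA = 0.15           # single-test false positive rate, approx. Grubin and Madsen
MONTHS_REMAINING = 24  # assumed licence period left after recategorisation

def p_further_false_positive(interval_months: int) -> float:
    """P(at least one more false positive) over the remaining licence period."""
    n_tests = MONTHS_REMAINING // interval_months
    return 1 - (1 - ALPHA) ** n_tests

for label, interval in [("low risk, six-monthly", 6), ("high risk, three-monthly", 3)]:
    print(f"{label}: {p_further_false_positive(interval):.1%}")
```

Under these assumptions, halving the testing interval raises the probability of a further false positive from roughly 48% to roughly 73%, without any change at all in the offender’s behaviour.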

If participation in polygraph screening is purely voluntary, then at least an offender who finds themselves on the wrong end of a false positive result can avoid the risk of further false positives by withdrawing from the screening programme, but if screening becomes compulsory, as the present government intends, then that option will be closed off, as any refusal to undergo a test will almost certainly be treated as a breach of licence and result in their being recalled to prison.

As offender management systems go, the introduction of compulsory polygraph testing for sex offenders most closely resembles one of the many variant explanations of Catch-22 given in the novel of the same name, specifically the version cited by victims of harassment by the Military Police as being the MPs’ explanation of Catch-22:

“Catch-22 states that agents enforcing Catch-22 need not prove that Catch-22 actually contains whatever provision the accused violator is accused of violating.”

None of this would, perhaps, matter so much were there evidence to show that the use of polygraph testing had a positive impact on recidivism and rates of reoffending amongst sex offenders but, as Meijer et al. note:

PCSOT has been used since the 1960s, and has been described as the missing link in preventing recidivism. Going through all relevant scientific databases (i.e., Psychinfo, Medline, Web of Science), however, we could not identify a single study that directly addressed the critical issue of whether PCSOT actually reduces recidivism. There is little reason to assume that PCSOT will have a direct effect on recidivism. Consider, for example, the study by Grubin and colleagues. These authors investigated whether the prospect of a polygraph test would influence offenders’ behaviors. Clearly, the authors expected that the prospects of a polygraph test would result in a reduction in high-risk behavior (Grubin et al., 2004). One-hundred and sixteen convicted sex offenders were approached, of which less than half (n=50) agreed to participate. High-risk behaviors were identified for each individual and two groups were created. One group was told that they would undergo a polygraph test in 3 months, whereas the other group was told their behavior would be reviewed, with no specific reference to the use of the polygraph. In fact, both groups were subjected to a polygraph test 3 months later. Thirty-two of the original 50 offenders reported for the polygraph test. Thirty-one of them (97%) disclosed having engaged in high-risk behavior, with no differences between the polygraph aware and the polygraph unaware group. For a second polygraph test, performed 3 months later, another 11 offenders dropped out. Of the remaining 21 offenders 15 (71%) disclosed high-risk behavior. This study shows that although PCSOT is able to reveal new information in a highly selected sample of motivated sex offenders (18% of the original sample completed both tests), the knowledge of an upcoming polygraph test does not seem to prevent offenders from engaging in high-risk behavior.

Given the lack of credible scientific evidence for the validity, accuracy and efficacy of polygraph testing and the numerous problems with the research that does exist that I’ve outlined in this article, one can easily be forgiven for wondering exactly how proponents of polygraph testing, such as Prof. Donald Grubin, have managed to sell politicians and policy makers the idea that compulsory testing of sex offenders is worth pursuing. The answer to this question, if you’re at all familiar with research published in the field of complementary and alternative medicines and therapies, is one that has a depressing ring of familiarity to it:

Most of the literature that supports post-conviction polygraph testing spends an inordinate amount of time assessing whether offenders, treatment providers, and community supervision officers find it a useful component of a treatment program (Grubin, 2008; Grubin, 2010; Heil et al, 2003). However, the real question is why would it matter whether an offender or provider find polygraph testing useful without evidence of changed behavior? For instance, research has shown that more expensive placebos are perceived to work better than cheaper placebos (Waber, Shiv, Carmon, & Ariely, 2008). The fact remains that both are placebos, so neither the expensive or cheaper variety are useful in treating any condition. Hence, opinions of individuals involved with polygraph testing, being they offenders or providers, do not provide an adequate measure of usefulness nor utility beyond the fact that people perceive them to useful or utile. The real measure of usefulness or utility of any correctional treatment or program is whether it delivers the desired change in whatever behavior it is trying to affect. One can argue against this position, but ultimately, this how correctional treatment programs and interventions are and should be judged. And indeed, the literature on “what works” in correctional treatment stress these behavioral change outcomes as indicative of program effectiveness (Gendreau, 1996; Gendreau, Goggin, Cullen, & Paparozzi, 2002; Gendreau, Smith, & French, 2006; Lowencamp, Latessa, & Smith, 2006; MacKenzie, 2000, 2005, 2007; Polizzi, MacKenzie, & Hickman, 1999)

That’s from a very recent paper (2012) by Jeffrey W Rosky of the University of Central Florida – “The (F)utility of Post-Conviction Polygraph Testing” – and it’s an observation that’s certainly relevant to the MoJ evaluation report, which also expends a significant amount of time on assessing whether or not offenders and offender managers find polygraph testing useful/helpful while providing precisely no evidence of changed behaviour.

However, the money shot in Rosky’s paper is in the next four paragraphs, which review the evidence for behavioural change:

Which brings us to the main thrust of this article: Does post-conviction polygraph testing reduce further offending? Discussions of increased disclosures, accuracy, habituation, sensitization, and deterrence aside, when the rubber meets the road, does polygraph testing deliver on its promise to reduce criminality and deviance? To put it in the words of a polygraph proponent, “Here, the ‘weight of evidence’ is less heavy” (Grubin, 2008, p. 185). And when Grubin (2008) refers to the weight as less heavy, he is not joking; there are exactly three studies that assess the impact of post-conviction polygraph on subsequent offending.

The first is a study by Abrams and Ogard (1986) compared recidivism rates between a group of 35 offenders on post-conviction polygraph testing and another group of 243 offenders not on post-conviction polygraph testing. They found that the first group had a 2-year recidivism rate of 31% and the second group had a 2-year rate of 74%. In the second study, Edson (1991) found that of 173 sex offenders under community supervision who were required to take a periodic polygraph test, 95% of these offenders did not reoffend within 9 years.

The third study, the most robust out of the three in terms of research design, by McGrath, Cumming, Hoke, and Bonn-Miller (2007) examined 104 sex offenders on post-conviction polygraph testing matched by type of treatment and supervision with 104 sex offenders not on polygraph testing. No significant differences between the two groups were found on age, educational attainment, sex offense type, or risk levels. They then recorded 5-year rates for new sex convictions, new violent (but non-sex) convictions, new nonviolent convictions, field violations, and prison returns. They also matched previous findings by showing increased disclosures of wider prior criminal history for the polygraph group. However, the only statistically significant difference (i.e., p<0.05) in offending they reported was on new violent convictions where the polygraph group had 2.9% or 3 new violent offenses and the nonpolygraph group had 11.5% or 12 new violent offenses. No other statistically significant differences were found including new sexual offenses, which were about even between the two groups.

In short, there is no statistically significant evidence of behavioural change in sex offenders subjected to polygraph testing, and the evidence that did emerge from McGrath et al. (2007) ran contrary to the central premise on which the use of polygraph testing is predicated:

What is more interesting is that although no statistical differences were found between the polygraph and nonpolygraph groups for any new offense, field violations or prison returns, rates were higher in the polygraph group for any new offense (39.4% vs. 34.6%), field violations (54% vs. 47%) and prison returns (47% vs. 39%). Moreover, these differences are practically important as the promise of post-conviction polygraph testing is that increases in disclosure of prior offending will allow for better treatment that will reduce risk of future offending and that the polygraph will also serve as a deterrent for field violations and new offending. Even if this sample was too small to find a difference, shouldn’t the nonpolygraph group have higher rates of new offenses, field violations and prison returns? Instead, the evidence goes in the opposite direction.

Oh dear.
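As a rough check on the significance pattern in the McGrath et al. figures quoted above, the sketch below runs a two-sided Fisher’s exact test on counts reconstructed from the quoted percentages: 3 of 104 vs 12 of 104 for new violent convictions, and 41 of 104 vs 36 of 104 for any new offence (the latter two counts are my own inference from 39.4% and 34.6% of 104). Fisher’s exact test is my choice for illustration; McGrath et al. may well have used a different test, so treat these p-values as indicative only.

```python
from math import comb

def fisher_exact_two_sided(a: int, n1: int, c: int, n2: int) -> float:
    """Two-sided Fisher's exact test comparing a/n1 events with c/n2 events.

    Sums the hypergeometric probabilities of every table with the same
    margins that is no more likely than the observed table.
    """
    k = a + c        # total number of events across both groups
    n = n1 + n2      # total number of subjects
    denom = comb(n, k)

    def prob(x: int) -> float:
        # Probability that x of the k events fall in group 1.
        return comb(n1, x) * comb(n2, k - x) / denom

    p_obs = prob(a)
    lo, hi = max(0, k - n2), min(k, n1)
    total = sum(p for p in (prob(x) for x in range(lo, hi + 1)) if p <= p_obs * (1 + 1e-7))
    return min(1.0, total)

# Counts reconstructed from the quoted percentages (104 offenders per group).
violent = fisher_exact_two_sided(3, 104, 12, 104)   # 2.9% vs 11.5%
any_new = fisher_exact_two_sided(41, 104, 36, 104)  # 39.4% vs 34.6%
print(f"New violent convictions: p = {violent:.3f}")
print(f"Any new offence:         p = {any_new:.3f}")
```

With these reconstructed counts the violent-conviction difference falls below the conventional 0.05 threshold while the any-new-offence difference comes nowhere near it, which is consistent with the pattern Rosky describes.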

For the record, the study by McGrath et al. is referenced in the MoJ evaluation report but, unlike Rosky’s paper, that report has only this to say about its findings:

Despite widespread usage, however, controversy has surrounded the polygraph, as the research used to assess its effectiveness has generally lacked scientific rigour (see McGrath et al, 2007).

One would assume that readers of the evaluation report are being referred here to McGrath et al. for a discussion within the paper of the lack of scientific rigour evident in studies which look at the effectiveness of the polygraph. That would certainly be the academic convention, but this statement is also sufficiently ambiguous as to suggest to a lay person that McGrath et al. might easily serve as an example of a study that lacks scientific rigour and prompt them to discount its key conclusions (not least because the likelihood of any politicians or civil servants at the MoJ going to the trouble of reading McGrath et al. in full is vanishingly small). Those conclusions, in McGrath et al.’s own words, are as follows:

The results of this study support research findings cited earlier indicating that individuals who have committed sexual offenses and who undergo polygraph compliance testing admit to engaging in previously withheld high risk behaviors and that providers find this information relevant for improving treatment and supervision services. Although it seems logical that these outcomes would lead to lower recidivism rates, the present results do not provide much support for this hypothesis.

On the whole, however, I think Rosky provides a much better summation of the actual evidence:

The overall conclusions that can be drawn by these three studies is that evidence for utility of the polygraph in reducing offending is weakly supported by the first two studies, but this conclusion is undermined by the lack of adequate controls for selection bias, lack of a random sample, and probable confounding in addition to the facts that the Abrams and Ogard (1986) study appeared in Polygraph, which is essentially a trade journal for polygraph examiners and the Edson (1991) study was a technical report never submitted for peer review. Which leaves the only academically peer reviewed study of note, McGrath et al. (2007), and it directly contradicts the utility hypothesis by having nonsignificant but higher rates of new offending, field violations, and prison revocations for the post-conviction polygraph test group versus a control group. Rather than Grubin’s (2008) assertion that the evidence for the post conviction polygraph testing reducing criminal and deviant behavior is less heavy, it is non-existent.

Rosky’s paper concludes with a number of important recommendations, two of which are particularly relevant to the manner in which the MoJ’s pilot study has been conducted:

Despite the negative conclusions drawn in this review, it would be remiss to not chart a path that would allow us to amass the needed evidence to effectively judge post conviction polygraph testing’s real utility. With that in mind, first, we need researchers, clinicians, and polygraph examiners to stop selling post-conviction polygraph testing as an effective, evidence-based tool for supervising offenders and start adequately assessing its efficacy and effectiveness with viable outcome measures such as new offenses, revocations, and technical violations. We have correctional agencies with enormous databases containing this information. It would be easy enough to identify, throughout the United States and other countries, a proper sample with differing types of sex offenders with post-conviction polygraph test exposure, match them with a comparable cohort with no exposure, and measure these recidivism outcomes. In addition, we can use propensity score matching with these data to approximate randomized controlled trials which would make this evidence even more compelling (Stuart, 2010). And although it points toward a potential conclusion, simply relying on a single negative study of 208 sex offenders from Vermont is not enough evidence to dismiss post-conviction polygraph testing as an ineffective or counter effective tool. Also claiming that supervisory and treatment personnel and offenders “like it” or find it useful is not evidence because science is not nor should be a popularity contest. Instead, it must winnow out competing hypotheses with compelling evidence of efficacy and effectiveness and in this case, recidivism, violations, and revocations are the only outcomes of interest to demonstrate polygraph testing’s efficacy and effectiveness. 
And when we find substantial compelling evidence against a hypothesis, no matter how much we want it to be true, the scientific method requires us to dismiss it and adopt the hypothesis with real empirical and theoretical support (Sagan, 1996). That really is the beauty of the scientific method; it does not care whether we like the results, it merely requires that we change our views when we are wrong. We need to be willing to do that with all correctional treatments, including post-conviction polygraph testing.

Second, and echoing NRC (2003), more research—performed by researchers and scientists indifferent and, more importantly, unvested in the success or failure of any polygraph theory or test type—is needed to (a) establish using randomized, controlled trials with appropriate placebo groups what type, if any, of polygraph testing has the most theoretical and empirical support; (b) determine how this type of testing would effectively aid criminal justice agencies in the supervision of offenders in the form of reduced recidivism; (c) assess the impact on accuracy by diseases and mental illnesses related to the physiological processes used in polygraph testing; and (d) if efficacy and effectiveness is found, determine the best way to incorporate these methods into agencies that minimizes adverse events from both false positives and false negatives.

Rosky is absolutely correct. Before we go anywhere near introducing the use of polygraphs into our own criminal justice system, there need to be properly conducted randomised controlled trials that use clear and, as importantly, relevant empirical outcome measures, carried out by researchers who do not have a vested interest in either the success or failure of such trials. Research, in short, that is everything that the MoJ’s pilot study isn’t.

That the MoJ’s pilot falls woefully short of what most would consider to be fairly basic standards of research practice and evidence is hardly surprising when one considers that the research itself was carried out by Professor Donald Grubin, Chair of Forensic Psychiatry at Newcastle University’s Institute of Neuroscience, the same Grubin that Rosky justifiably takes a shot at for describing the evidence for the utility of polygraph testing as ‘less heavy’ when he should have said ‘non-existent’.

Outside his day job as an academic, Grubin is a board member of the Independent Safeguarding Authority and Scottish Risk Management Authority and a member of the Ministry of Justice Correctional Services Accreditation Panel and the Northumbria MAPPA Strategic Management Board, all of which puts him in a position of considerable influence within the MoJ’s side of the criminal justice system.

He’s also a member of the editorial board of ‘European Polygraph’, a journal that operates out of the private Andrzej Frycz Modrzewski University in Krakow, although, curiously, he hasn’t elected to include any mention of that particular gig on his profile page at Newcastle University.

On top of all that, Grubin is also the co-author, with Anthony Beech, of a 2010 editorial in the BMJ in which he backs the use of chemical castration for sex offenders (and even actual castration), but only if an offender volunteers for it since, in his estimation, that neatly sidesteps any awkward ethical questions about a doctor’s duty to act only in the best interests of his patients. That editorial deservedly drew a stinging rebuke from Giuseppe Vetrugno and Fabio De Giorgio of the Gemelli Clinic, Rome:

It could be objected that, in the case in point, the patients have consented to the treatment. But, while this is technically true, there can be no denying that consent to a pharmacological therapy based on antiandrogenic drugs by an individual suffering from a tumour of the prostate, and not subject to incarceration, is quite a different matter from consent to the same therapy by an inmate who has been presented with the alternative of continued limitation of his personal freedom. It is the barter between “diminished health/freedom” implicit in this and similar proposals that proves offensive to the dignity of the individual offered the alternative: offensive because it constitutes an abuse of the condition of objective inferiority in which the inmate, though convicted of a despicable crime, finds himself.

…

Such a mentality would appear to be at the root of the increasingly frequent calls for more extensive freedom for administering pharmaceuticals, apart from any therapeutic benefits corroborated by medical evidence, to certain categories of individuals, such as inmates. The same panel of studies cited by the authors (1) demonstrates that there is no consensus opinion in the literature on the effectiveness of the various therapeutic protocols proposed; indeed, certain reviews argue that there is no scientific evidence to support of the chemical castration of inmates convicted of sex crimes (4). And so the contention that the treatment works to the advantage of the inmate, the idea that the convicted criminal redeems himself in the eyes of society through a voluntary gesture of reparation – though, in reality, this amounts to nothing less than an (in no way unconstrained) acceptance of mutilation sine die, albeit a merely pharmacological mutilation, as well as the essentially replacement of a punishment handed down by the legal system with a pharmacological punishment consisting of (forced, because there is no alternative) acceptance of the risk of damage to one’s health inherent in treatments with antiandrogenic drugs, all represent pseudo-humanitarian alibis that utilise, under a logic of greater or lesser returns, a form of punishment already questionable in its own right, employing it in the name of a indeterminate collective good.

And I have to agree here with Vetrugno and De Giorgio. In fact, having read a few of Grubin’s papers, including this 2010 editorial from the Journal of the American Academy of Psychiatry and the Law and this brief paper that I found (via Google) on the website of the National Organisation for the Treatment of Sexual Abusers (NOTA), I have to say that I find Grubin’s approach to both the ethical issues involved in dealing with sex offenders and the evidence on the use of polygraphs to be easily as glib, superficial and riddled with obvious biases as anything you’ll find published in support of homoeopathy, and every bit as disturbing.

That last short paper for NOTA appears, from its document properties, to have been written only last year and yet, in complete contrast to the evidence that I’ve put forward in this article, it offers the following conclusions on the use of polygraph testing:

Polygraph testing of convicted sex offenders has its critics. There are arguments about its validity, usefulness and ethics (British Psychological Society, 2004; Cross & Saxe, 2001). A published debate airing all of the issues in respect of sex offender testing can be found in Grubin (2008) and Ben-Shakhar (2008).

The evidence base is supportive of PCSOT in respect of its ability to contribute to sex offender treatment and supervision, but a proper cost-benefit evaluation of PCSOT still remains to be done.

Polygraph testing should only be administered by PCSOT trained examiners whose work is quality controlled. Tests should always be visually recorded, and reports of the tests provided.

To which one can only respond… WTF???? Note, too, that although this ‘paper’ might have been written prior to the publication of Rosky, it certainly doesn’t pre-date that of Meijer et al., which, again, Grubin appears to have studiously ignored.

As I mentioned at the outset, it is hard to imagine a group less likely to attract any kind of public sympathy than sex offenders, but whatever antipathy we might feel towards them cannot be used to justify turning a blind eye to the incorporation of ‘correctional quackery’ into our own criminal justice system:

One of the many issues to be faced, as so splendidly phrased by Gendreau, Smith and Theriault (2009), lies in ‘correctional quackery and the law of fartcatchers’. Gendreau et al. discuss how a great deal of practice in corrections is based on whimsy and rhetoric, rather than evidence, promoted by those in positions of authority but without the specialist knowledge to be critical of the policies and practice they endorse (see Box 11.9).

————————————————————

Box 11.9 Fartcatchers and correctional quackery

What is correctional quackery? Gendreau et al. (2009: 386) describe it as those ‘common sense’ fix-it cures for crime that permeate the criminal justice system: ‘there are a multitude of other commonsense programs that have surfaced in the media that have escaped evaluation, such as acupuncture, the angel-in-you therapy, aura focus, diets, drama therapies, ecumenical Christianity, finger painting, healing lodges, heart mapping, horticulture, a variety of humiliation strategies (e.g., diaper baby treatment, dunce cap, cross dressing, John TV, sandwich board justice, Uncle Miltie treatment), no frills prisons, pet therapy, plastic surgery, and yoga … [more instances] … such as brain injury reality, cooking/baking, dog sledding, handwriting, interior decorating in prisons (e.g. pink and teddy bear decor), classical music and ritualized tapping’.

How could such a litany of fads be allowed to happen? Again, Gendreau et al. provide an explanation, this time with reference to research showing a null effect of boot camps, of how this quackery can occur: ‘Reacting to the study (research on the lack of effectiveness of military boot camps), a spokesman for Governor George Zell Miller said that “we don’t care what the study thinks” – Georgia would continue to use its boot camps. Governor Miller is an ex-Marine, and says that the Marine boot camps he attended changed his life for the better: he believes that the boot camp experience can do the same for wayward Georgia youth … “Georgia’s Commissioner of Corrections” … also joined the chorus of condemnation, saying that academics were too quick to ignore the experiential knowledge of people “working in the system” and rely on research findings’ (quote cited from Vaughn, 1994:2).

————————————————————

The exercise of “common sense” allows scientific evidence to be dismissed in favour of anecdote, fashion and personal experience, so the solution to a complex problem, such as criminal behaviour, is reduced to a single-shot, evidence-free, magic bullet. The exercise of common sense places personal values and experience above knowledge and results in expensive and potentially damaging poor practice. The ‘fartcatchers’ are those who stand downwind and allow banal practice to spread (the word originally referred to obsequious servants who, like Uriah Heep, trail after their masters with cloying humility, fawning and eager to please).

The fact that practice without an evidence base can flourish in the criminal justice system raises two immediate issues. First, it calls into question the probity of those running the system: if health services allowed such unproven, unscientific practices with people with physical ailments there would, rightly, be a public outcry. Second, it is inevitable that this quackery will have no effect on offending, thereby providing fuel for those who wish to return to nothing works (Cullen, Smith, Lowenkamp & Latessa, 2009).

There are serious questions to be asked here, not least how government policy has come to be shaped to a very large extent by the personal views and opinions of a single academic psychiatrist with an apparent penchant for cherry-picking and a worryingly shallow grasp of basic ethical and evidential standards. But with the statutory instrument needed to implement mandatory polygraph testing already before Parliament, that may be a question for the lawyers to pursue in court since, on an honest appraisal of the evidence, there would seem to be ample grounds for challenging the legality of such testing, not just on human rights grounds but on questions of basic justice.

Don’t be fooled, the polygraph is not a “lie detector” – nor is it a “test”! In fact, it is nothing but a sick joke! It is an interrogation, an intimidation, an inquisition, a trial by ordeal – and the polygraph operator is the judge, jury, and executioner! If you are found guilty of lying, there is no appeal from his decision! And to make matters worse, even if you tell the complete truth you will probably still fail! Until you take a polygraph test, you have no idea how traumatic and grueling it can be – it is that way for a reason. The polygraphers want you to be so frightened that you “spill your guts”. In fact, many people are so intimidated that they make statements that the polygrapher will use to incriminate them – some people are so frightened that they confess to things they haven’t even done!

It is FOOLISH and DANGEROUS to use the polygraph as “lie detector” – the theory of “lie detection” is nothing but junk science. It is based on a faulty scientific premise. The polygraph operators have the audacity to say that there is such a thing as a “reaction indicative of deception”, when I can prove that “lying reaction” is simply a nervous reaction commonly referred to as the fight or flight syndrome. In fact, the polygraph is nothing but a psychological billy club that is used to coerce a person into making admissions or confessions. It is FOOLISH and DANGEROUS for the criminal justice system to rely on an instrument that has been thoroughly discredited to determine whether or not a person is truthful or deceptive, or to use it to guide their investigations in any way – especially when the results cannot even be used as evidence in a court of law! And it is FOOLISH and DANGEROUS for anyone to believe they will pass their polygraph “test” if they just tell the truth! When you factor in all the damage done to people who are falsely branded as liars by these con men and their unconscionable conduct, this fraud of “lie detection” perpetrated by the practitioners of this insidious Orwellian instrument of torture should be outlawed! Shame on anyone who administers these “tests” – and shame on the government for continuing to allow this state sponsored sadism!