After last week’s Leveson splurge it’s time to get back to normal business and remind everyone what public interest journalism looks like via an old ‘friend’ of the Ministry that’s popped its head up above the parapet.

Benefit cheats to face lie detector tests

Council leaders have introduced lie detector tests in a bid to catch benefit fraudsters who trick them out of thousands of pounds every year.

The move has already helped anti-fraud officials catch nearly 4,000 fraudsters claiming council tax discounts and saved more than £1,400,000 in four months.

The new hi-tech system – which analyses telephone calls from claimants for signs of stress in their voices – is being run by Southwark Council in south London.

Council bosses have defended its use, saying the town hall is the 13th worst hit by Government cuts and therefore has to take a tough line on fraud.

Lie detectors were trialled by former Work and Pensions Secretary John Hutton four years ago with 24 councils across Britain. Then, two years ago, the Government abandoned the detector tests after the trials suggested they were unreliable.

But insurance companies still use the technology to catch criminals.

The ‘hi-tech system’ referred to here is, of course, Nemesysco’s Layered Voice Analysis software, which I’ve written about on several occasions stretching all the way back to December 2008, including a key article on the full outcome of the Department for Work and Pensions’ trial that, unfortunately, fell down the Internet’s memory hole due to a server crash at the beginning of 2011. With this in mind, rather than barrel straight in with criticism of this latest attempt to use the system to assess benefit claimants, I’ve decided to go back to first principles and use the story as a springboard for a reasonably comprehensive overview of this supposed technology and its history, one that’s aimed at readers who are new to the issues.

So I’ll be explaining exactly what the system is – or rather what it claims to be – and how it’s being used in practice by Southwark Council and by a number of insurance companies. After that we’ll look at the scientific issues and evidence and, in some detail, at the DWP’s trial, for which I have the actual data that the DWP used to evaluate the system (obtained using the Freedom of Information Act). I’ll finish up by explaining the background to Capita’s involvement with the system.

What is this system and how is it being used?

The term you’ll most often come across when this system is being described is ‘Voice Risk Analysis’ (VRA), which consists of two components:

– a computer software package, developed by an Israeli company called Nemesysco, which – if its developers’ claims are to be believed – is capable of detecting a wide range of emotional and cognitive states from the information contained in people’s speech, including stress associated with lying and giving misleading information; and,

– a series of carefully scripted questions, which are used to elicit the speech information that the software analyses.

To be absolutely clear, the system does not analyse what people say; rather, it purports to identify the tell-tale signs of different emotions and thought processes in the way people respond to these questions, i.e. patterns in the tone of voice and flow of speech, referred to as microtremors, of which people are not consciously aware.

Nemesysco calls the software component of this system, and the processing it performs, ‘Layered Voice Analysis’ (LVA), and it is claimed that this is a much more sophisticated approach to detecting information in speech than the previous generation of ‘Voice Stress Analysis’ (VSA) systems, which claimed only to be capable of detecting stress – associated, usually, with lying – in vocal information.

Although still widely used in law enforcement in the United States, claims that the older VSA-based systems were capable of detecting stress associated with lying have, in reality, been roundly debunked. Not only have speech scientists failed to find any evidence for the existence of the specific feature in speech (the ‘Lippold Tremor’) that VSA systems are supposed to detect, but an independent analysis of one of the most commonly used VSA systems found that the system never operated at the frequency at which this feature is supposed to exist. All of which explains, of course, why Nemesysco goes to considerable lengths to deny any suggestion that its LVA system is in any sense similar to the older VSA systems.

The actual process by which VRA assessments are carried out is documented, at least as far as the DWP’s trials are concerned, in a shoddily produced flowchart in the DWP’s evaluation report, which I’ll link to when we come to look at the trials themselves. However, the short version of the process runs as follows:

1. Benefit claimants are ‘vetted’ to exclude those who are considered unsuitable for assessment using VRA. Those excluded at this stage include people for whom English is their second language or who require the use of a translator and some people who are elderly and/or have a disability.

2. Claimants are contacted about the claim and undergo a scripted interview, with their responses to questions being evaluated using Nemesysco’s LVA system. Based on this interview, claimants are either classified as ‘low risk’ or ‘high risk’.

3. ‘Low risk’ claimants have their claims fast-tracked through the system without the need for further verification.

4. ‘High risk’ claimants are required to provide further documentary evidence in support of their claim and may also be given a second VRA interview and/or receive a home visit to check their claim. If this results in their being reclassified as ‘low risk’, their claim is processed, but if they are still considered to be ‘high risk’ then their claim is passed to fraud investigators.

Clearly, this system is heavily dependent on the VRA system providing an accurate assessment of the risk of fraud associated with each claimant. If it fails to pick up on claimants who are providing false information, then those claims will be fast-tracked through the system without carrying out further checks that might reveal that fraud is taking place. On the other hand, if it erroneously flags up genuine claimants as presenting ‘high risk’, then those claimants will have to jump through a number of additional hoops to get their claim processed and, in the worst case scenarios, may find themselves under investigation as a suspected fraudster.

So the question is ‘Does the system actually work?’ and, perhaps more to the point, ‘Is it even capable of providing accurate assessments?’, which is where we begin to turn to science for our answers.

Voice Risk Analysis – What does the science say?

There are two key papers that cover the scientific background to Nemesysco’s LVA system, both of which are based on an analysis of the patents registered by Nemesysco’s founder, Amir Lieberman, which describe the ‘scientific’ foundations of the system:

– Eriksson, A. and Lacerda, F. (2007). Charlatanry in forensic speech science: A problem to be taken seriously. International Journal of Speech, Language and the Law, 14, 169-193.

This is the paper that was infamously withdrawn from publication by the small niche journal in which it appeared after the journal’s publisher was threatened with litigation for libel by Nemesysco, and

– Lacerda, F. (2009). LVA Technology: A short analysis of a lie.

Currently, I can’t find a copy of this paper online to link to, although I do have a personal copy, which was kindly supplied by Prof. Lacerda. However, a modified and somewhat less technical version of this paper, written for a non-specialist audience, is available as “LVA Technology: The illusion of lie detection”, and I strongly recommend that you read it.

Professor Francisco Lacerda also has a personal blog, containing further information on his investigations of the LVA system, which is well worth checking out.

Eriksson and Lacerda’s findings regarding Nemesysco’s LVA system can usefully be summarised as follows:

1. There is no known scientific theory that explains exactly how humans detect emotional content in speech and, certainly, no evidence to support the idea that a piece of computer software can perform this highly complex task with any kind of accuracy or reliability.

2. The claim, made in the patent, that this software analyses ‘intonation’ in speech is, at best, founded on a wholly superficial understanding of acoustic phonetics and, at worst, is simply inaccurate.

3. The signal processing carried out by the software on the low quality samples it uses effectively strips the speech signals captured by the system for analysis of almost all their useful information, and

4. The analysis itself is both mathematically unsophisticated and amounts to nothing more than a few very basic statistical calculations on two arbitrarily defined types of digitisation artefacts present in the speech signal after it has been sampled and filtered to the point that much of the signal, were it to be played back to the operator, would be barely recognisable as human speech.

So the system, both literally and figuratively speaking, generates a bunch of meaningless noise from which all useful and usable speech information has been stripped, which it then crudely ‘analyses’ with a few basic statistical calculations to produce its ‘results’. As Lacerda points out in the second paper:

The essential problem of this LVA-technology is that it does not extract relevant information from the speech signal. It lacks validity. Strictly, the only procedure that might make sense is the calibration phase, where variables are initialized with values derived from the four variables above. This is formally correct but rather meaningless because the waveform measurements lack validity and their reliability is low because of the huge information loss in the representation of the speech signal used by the LVA-technology. The association of ad hoc waveform measurements with the speaker’s emotional state is extremely naive and ungrounded wishful thinking that makes the whole calibration procedure simply void.

Given these comments, it’s worth noting that research conducted using Nemesysco’s LVA system has, to date, only generated positive results in situations where Nemesysco, and people associated with the company, have been directly involved in the research and, particularly, where researchers have relied on the company to ‘calibrate’ the system for them. On the rare occasions that it has been tested independently of Nemesysco, the system has failed to perform as expected and produced results consistent with chance – as one researcher noted, the system performed no better than a ‘coin flip’.

This gets particularly interesting when we consider the findings of two independent studies conducted almost six years apart.

In 2009, Gary Elkins, a postdoctoral researcher working at the University of Arizona’s National Centre for Border Security and Immigration, which is funded by the US Department of Homeland Security, published a paper entitled ‘Evaluating the Credibility Assessment Capability of Vocal Analysis Software’ in which he made the following observation:

Mirroring the results of previous studies, the vocal analysis software’s built-in deception classifier performed at the chance level. However, when the vocal measurements were analyzed independent of the software’s interface, the variables FMain, AVJ, and SOS significantly differentiated between truth and deception. This suggests that liars exhibit higher pitch, require more cognitive effort, and during charged questions exhibit more fear or unwillingness to respond than truth tellers.

Perhaps – although based on Lacerda’s comments, what the system’s FMain, AVJ and SOS parameters are actually measuring is anyone’s guess.

Coupled with that, however, is a 2003 report for the US Department of Defence by Brown, Senter and Ryan, which tested Nemesysco’s ‘Vericator’ system – an earlier iteration of the current LVA system – in which the authors note that the system failed to perform as expected, which they attributed to their own ‘miscalibration’ of the system, after which they add:

A major caveat must be placed here. We used logistic regression analyses to fit our data to known and desired outcomes. This heavily biased the outcomes to yield the most favorable In order to test the derived decision algorithms’ respective accuracies without bias, a new study would have to generate new data to test the algorithms. This was well beyond our original intent and scope. Another point of concern we have with the ability to generalize these results to new data stems from the fact that the derived decision algorithms for Scripted and Field-like questioning were quite different. The Scripted algorithm used eight of the nine Raw-Values parameters while the Field-like algorithm used only four. This raises concerns that these algorithms are based upon highly variable data. As a result, cross-validation with new data is questionable.

In both cases, a post hoc analysis of the system’s raw output, the statistical ‘noise’ it generates through its invalid processing methods, turned up patterns in the data that did fit with the researchers’ known and desired outcomes.

Of course, if your dataset is large enough and noisy enough, and you’re prepared to ‘torture’ it enough to find something that appears meaningful, then you’ll find all manner of random correspondences, from this season’s baseball averages to the closing positions of the FTSE 100 index to the answers to your kid’s maths homework. There is a whole cottage industry of biblical ‘prophecy’ based on hunting down what are supposed to be secret messages encoded in the text of the Bible, all of which are, of course, nothing more than the products of random chance. Search hard enough in the noise and you’ll find patterns which seem meaningful but which are actually entirely meaningless.
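If you want to see how easily this happens, here is a small Python simulation. It is only an illustration of the general problem, not a model of Nemesysco’s software: it generates nine purely random ‘voice parameters’ (nine simply echoes the number of Raw-Values parameters Brown et al. mention) and random truth/lie labels for 40 hypothetical subjects, searches every single-parameter threshold rule for the one that best fits that data after the fact, and then scores the winning rule on a large batch of fresh, equally random data. The function names, sample sizes and random seed are my own arbitrary choices.

```python
import random


def make_noise_dataset(n_subjects, n_features, rng):
    """Random 'voice parameters' and random truth labels: there is no real signal at all."""
    X = [[rng.gauss(0.0, 1.0) for _ in range(n_features)] for _ in range(n_subjects)]
    y = [rng.random() < 0.5 for _ in range(n_subjects)]  # True means 'lied'
    return X, y


def rule_accuracy(rule, X, y):
    """Fraction of subjects a (feature, threshold, direction) rule classifies correctly."""
    j, threshold, above_means_lie = rule
    correct = sum(((row[j] > threshold) == above_means_lie) == label
                  for row, label in zip(X, y))
    return correct / len(y)


def post_hoc_best_rule(X, y):
    """Try every feature, every observed value as a threshold and both directions,
    keeping whichever rule fits *this* data best (the post hoc search)."""
    best_rule, best_acc = None, -1.0
    for j in range(len(X[0])):
        for row in X:
            for above_means_lie in (True, False):
                rule = (j, row[j], above_means_lie)
                acc = rule_accuracy(rule, X, y)
                if acc > best_acc:
                    best_rule, best_acc = rule, acc
    return best_rule, best_acc


rng = random.Random(2012)
X_fit, y_fit = make_noise_dataset(n_subjects=40, n_features=9, rng=rng)
rule, in_sample = post_hoc_best_rule(X_fit, y_fit)

# Score the same rule on fresh noise: the cross-validation Brown et al. say is needed.
X_new, y_new = make_noise_dataset(n_subjects=10_000, n_features=9, rng=rng)
out_of_sample = rule_accuracy(rule, X_new, y_new)

print(f"accuracy on the data used to pick the rule: {in_sample:.0%}")
print(f"accuracy on fresh data:                      {out_of_sample:.0%}")
```

Because there is no signal in the data, the post hoc ‘best’ rule will typically look well above chance on the data it was fitted to and then fall back to roughly 50%, a coin flip, on new data, which is exactly why Brown, Senter and Ryan warned that their fitted algorithms would need testing on fresh data.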

The relevance of this to Nemesysco’s LVA system is clearly illustrated by an exchange of emails published as appendix C in a 2006 paper by Harry Hollien and Jerry Harnsberger – another independent study in which the system failed to live up to Nemesysco’s hype. These emails show staff at a company called V LLC, which was at the time the licensed vendor for Nemesysco’s system in the US, trying to influence the parameters of the trial by tossing the researchers all manner of suggested settings for ‘calibrating’ the system, which were at odds with the parameters chosen by the researchers based on the documentation that they had been given with the software.

What is clear from this exchange is that what V LLC were doing in the background was trying to run their own simulations of the research study in order to carry out the same type of post hoc analysis that was carried out by Elkins and by Brown et al. in an effort to supply Hollien and Harnsberger with a set of ‘calibration settings’ that would rig the test in the system’s favour.

To sum up, then, the scientific evidence says that the system doesn’t work and that it is, in fact, incapable of generating any kind of meaningful information about the unconscious emotional or cognitive content of speech without resorting to retrofitting the statistical noise it generates to known or desired outcomes. Its predictive value is zero and yet it was, and still is, being used in live systems to assess the honesty of benefit claimants and, indeed, people making insurance claims.

That’s enough raw science for now; time to move on to the DWP trials themselves and the actual data they generated, data that the DWP has never published in full.

The DWP Voice Risk Analysis Trials

In November 2010, the DWP published its own evaluation of its trial of Voice Risk Analysis on housing benefit claims in 24 UK local authorities. The evaluation’s abstract gives the following, rather unilluminating, information about the trials:

It is estimated that the Department for Work and Pensions (DWP) overpays £3.1bn in benefit due to fraud and error. Whilst proportionally small at 2.1% of all benefit expenditure, it represents a significant cost to the taxpayer. The Department is keen to exploit new solutions to the problem of fraud and error, and Voice Risk Analysis (VRA) is reported to have been used successfully in the private sector. The Department helped to fund trials of the technology in 24 local authorities (LAs) on the processing of new claims, in-claim reviews and reported changes of circumstance to Housing Benefit (HB) which took place between August 2008 and December 2010. This report details the evaluation of the trials and the resulting conclusions drawn.

There are one or two things that the DWP doesn’t mention in the abstract that are worth flagging up.

First, there were 39 separate trials in local authorities in total, including the seven that took place in the initial pilot phase of the study, spread across three different types of housing benefit claim: renewals, notified changes in circumstances and new claims. However, only 31 of these 40 trials reported usable data back to the DWP – at least according to the dataset I was sent following an FOI request, which I received on the same day that the evaluation report was published.

Second, there were twenty-six local authorities involved in the trials, of which five failed to provide any usable system performance data, although in one case (Windsor and Maidenhead) I did obtain interim performance data direct from the local authority six months into its trial, data that, somewhat curiously, failed to match the limited amount of information that the DWP did supply about that particular trial. According to the DWP’s dataset, Windsor and Maidenhead carried out only 297 VRA calls during its entire trial, of which 13 were classified ‘high risk’. However, according to the same council, based on its returns to the DWP, it carried out 403 VRA calls in the first six months of its trial, of which 17 were classified ‘high risk’ – clearly some of the trial data has gone ‘walkabout’ somewhere along the line for reasons which remain unexplained.

Then there was the 40th trial, a pilot phase trial conducted by Jobcentre Plus on JSA claimants that appears to have been omitted from the final evaluation report – for the record, Jobcentre Plus backed out of phase 2 after its phase 1 results proved to be rather disappointing.

So, even before we start there are some data quality issues.

The evaluation report offers up the following summary of its results:

Twelve local authorities tested VRA on in-claim reviews with one strong positive and six weak positive results.

Two local authorities tested VRA on changes in circumstance with one strong positive and one weak positive result.

Nine local authorities tested VRA at new claims stage with three strong positive and three weak positive results.

Before going on to put forward the following conclusions:

The evaluation was intended to determine whether VRA worked when applied to the benefit system. From our findings it is not possible to demonstrate that VRA works effectively and consistently in the benefits environment. The evidence is not compelling enough to recommend the use of VRA within DWP. At no stage did the evaluation of management information carried out by the Department explicitly consider the effectiveness of the technological aspects of VRA. However, social research evidence suggests that the scripting and training elements of the trial were successful.

The trial appeared to suggest it is operationally difficult to sufficiently monitor the success of VRA. Any risk based verification solution such as VRA would require on-going independent monitoring during live running to ensure the robustness of the process.

So far, so good, but then suddenly things go rapidly downhill, thanks to the DWP’s failure to consider what its results might have to say about the ‘technological aspects of VRA’, i.e. Nemesysco’s LVA system.

The failure to find compelling evidence of discrimination does not mean that the process could not work in other environments, as this is one trial conducted in a complex operational environment. The trial focussed on what an operational deployment would mean to DWP in terms of potential benefits and risks. The focus on operational deployment – an assessment of whether the VRA process ‘worked’ in LAs and therefore potentially have a role across DWP- meant that certain areas for exploration, such as the reliability of operators’ judgements of risk, and the science behind the technology were not part of the scope of the trial. Therefore, this trial is more about whether it could work rather than how or why it works.

No, one failed trial at the DWP does not indicate that the system absolutely could not work in other environments but, on an honest appraisal of the scientific evidence, it does add further weight to Eriksson and Lacerda’s view that Nemesysco’s LVA system is a complete and utter lemon. In that context, it is well worth pointing out that, in total, the DWP spent over £2 million on running 18 months’ worth of trials on a system for which it had not undertaken a proper technical evaluation. I actually put this issue directly to the DWP via an FOI request and got the following response:

The Department is conducting its own research into the efficacy of Voice Risk Analysis based on trials by Local Authorities and Jobcentre Plus. Whilst there is a body of published research on Voice Risk Analysis, there is no published research on the efficacy of the technology when applied to benefit claims. Additionally, the system as tested by the Department and Local Authorities combines the VRA technology with intelligent questioning and a process redesign.

There’s a distinctly homoeopathic feel to that answer – never mind the evidence that it’s a crock, if we keep trying it maybe we’ll find something it does work on. In all it’s rather like sending an expedition to a newly discovered island just to be sure that rocks don’t fall upwards there, unlike everywhere else.

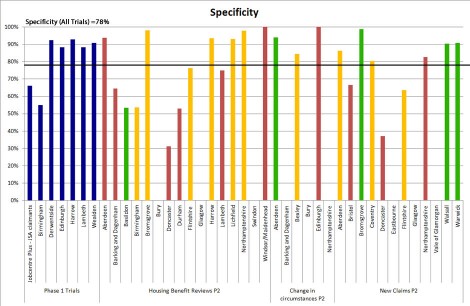

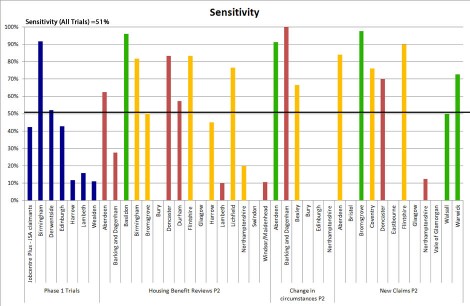

So that’s the DWP’s rather shabby take on its own research; now for a much clearer picture of the issues. To keep things simple, we’ll just focus on two of the more important statistical metrics one can generate from the trial data, the sensitivity and specificity of these systems during the trial, together with the reliability of their performance across different sites.

To do that, we need to define a few terms, so:

– Sensitivity, in this context, means how well the system did in performing its primary task, that of flagging up people who deliberately gave false or misleading information about their benefit claim during the VRA interview. The higher the sensitivity of the system during the trial, the more people it correctly identified as having given false information, flagging them as high risk claimants and, by the same token, the fewer people it wrongly identified as high risk claimants when they’d actually given accurate information.

– Specificity, as you might well imagine, measures how accurately the system performed when identifying claimants as low risk, so the higher the score, the more honest claimants it let through onto the fast track. This, of course, means that the lower the score, the more people who gave false information had their claims fast-tracked without further checks although, during the trial itself, a randomly chosen sample of just under 10% of low risk claimants were given the same follow-up checks as high risk claimants in order to assess the effectiveness of the system.

So, what the DWP were ideally looking for were high scores for both sensitivity and specificity, particularly specificity, as a low score there would mean that the system was failing to identify people who had given false information and was putting their benefit claims on the fast track. They were also looking for the system to perform reliably across different trial sites, i.e. for all, or at least a majority, of the sites to produce quite similar results for both sensitivity and specificity. A system that catches fraudsters 90% of the time in one area but only 10% of the time in another is clearly of no use to the DWP, as any cost savings that can be made from fast-tracking low risk claims will quickly be eaten up by the costs of constantly monitoring the performance of these systems to ensure that they are working correctly in all areas.
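It’s worth pinning down the standard definitions here, because four closely related figures are easy to mix up. In diagnostic testing, sensitivity is the share of claimants who really gave false information that the system flagged as high risk, and specificity is the share of honest claimants it classified as low risk. The share of flagged claimants who really had given false information is the positive predictive value, and the share of low risk claimants who really were honest is the negative predictive value; statements such as ‘one in two flagged claimants was honest’ are, strictly speaking, about the positive predictive value. The Python sketch computes all four from a 2×2 table. The counts are invented purely for illustration and are not the DWP’s data, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    """2x2 outcome table for a risk-scoring system (hypothetical counts)."""
    false_info_flagged_high: int  # true positives
    false_info_passed_low: int    # false negatives
    honest_flagged_high: int      # false positives
    honest_passed_low: int        # true negatives


def screening_metrics(cm: ConfusionMatrix) -> dict[str, float]:
    tp, fn = cm.false_info_flagged_high, cm.false_info_passed_low
    fp, tn = cm.honest_flagged_high, cm.honest_passed_low
    return {
        # Of claimants who gave false information, how many were flagged high risk?
        "sensitivity": tp / (tp + fn),
        # Of honest claimants, how many were put on the fast track?
        "specificity": tn / (tn + fp),
        # Of claimants flagged high risk, how many had given false information?
        "positive_predictive_value": tp / (tp + fp),
        # Of claimants passed as low risk, how many were honest?
        "negative_predictive_value": tn / (tn + fn),
    }


# Illustrative counts only, not taken from the DWP dataset.
example = ConfusionMatrix(
    false_info_flagged_high=60,
    false_info_passed_low=40,
    honest_flagged_high=90,
    honest_passed_low=810,
)
for name, value in screening_metrics(example).items():
    print(f"{name:>26}: {value:.1%}")
```

With these made-up counts the system catches 60% of the claimants who gave false information and clears 90% of the honest ones, yet only 40% of the people it flags actually gave false information, simply because honest claimants vastly outnumber dishonest ones.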

To make things easier, I’ve pulled the sensitivity and specificity scores for each trial site and type of trial that returned usable data into two column graphs, one showing the sensitivity scores with the other showing specificity. I’ve also colour coded the trials – the blue bars are the pilot sites, the green bars are sites which gave the DWP a strong positive result, yellow bars a weak positive result and red bars are sites where the system failed to produce a significant result, i.e. one better than chance alone would predict – and added a black horizontal line to each chart showing the combined sensitivity (or specificity) for all trials.

Last, and by no means least, you can click on each of the graphs to see a larger version of the image – so here are the two graphs.

So what can we say about these two charts?

Well, first, and most obviously, there are very significant differences in performance between different trial sites on the sensitivity chart, where the best performing trial produced a sensitivity above 90% while the worst had a sensitivity in the 10-15% range, so there is a very obvious reliability problem here.

On the specificity chart, the scores at different sites fall into a narrower range: with the exception of two trials conducted in Doncaster, all scores were above 50%, but that is still quite a wide variation between different sites.
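The gap between the spread of site results and a single combined figure is easy to illustrate. This is a minimal Python sketch assuming the combined figure is calculated by pooling the raw counts from every site rather than averaging the site percentages (the combining method is an assumption here, not something taken from the DWP dataset); the four sites and their counts are hypothetical. The point is that a respectable-looking pooled rate can sit on top of site results that range from excellent to useless.

```python
# Hypothetical counts per site: (claimants correctly classified, claimants in that group)
site_counts = {
    "Site A": (45, 50),
    "Site B": (6, 50),
    "Site C": (30, 60),
    "Site D": (10, 40),
}


def pooled_rate(counts: dict[str, tuple[int, int]]) -> float:
    """Combine sites by summing raw counts (assumed method), not by averaging percentages."""
    hits = sum(h for h, _ in counts.values())
    total = sum(n for _, n in counts.values())
    return hits / total


per_site = {site: h / n for site, (h, n) in site_counts.items()}
combined = pooled_rate(site_counts)
spread = max(per_site.values()) - min(per_site.values())

for site, r in per_site.items():
    print(f"{site}: {r:.0%}")
print(f"combined (pooled): {combined:.1%}, range across sites: {spread:.0%}")
```

Here the pooled figure works out at 91 out of 200, or 45.5%, which on its own says nothing about the fact that one hypothetical site scored 90% and another 12%.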

Overall, the combined data for all trials gave a sensitivity of just 51%, so one of every two ‘high risk’ claimants identified by the system and made subject to follow-up checks was actually a perfectly honest claimant. As for specificity, the combined value across all trials was 78%, which means that just over one out of every five claimants who were identified as ‘low risk’, and who would, were this not a trial, have had their claims fast-tracked without further detailed checks, actually gave false information. Throw in the reliability issues, which mean that councils using the system today have no way of knowing how well the system is, or isn’t, performing without at least carrying out follow-up checks on a decent sized sample of ‘low risk’ claimants (defeating one of the key objectives of the system, that of cutting costs by cutting down on the number of checks on claimants and all the bureaucracy that goes with it), and you can easily see why the DWP decided that the whole thing had been a failure and chose to back away from the system.

Unfortunately, the one thing it didn’t do is make its reasons for backing off absolutely clear in its evaluation, leaving the way open for Capita to continue hawking this useless system to local authorities. As for Capita’s explanation of the system’s failure to perform reliably, the company has never said anything publicly, but I did speak privately to council staff who had been involved in the trials and was told, quite clearly, that the company had blamed the staff using the system for its failure to perform adequately and reliably, rather than take a long hard look at what speech scientists and other independent researchers have had to say about Nemesysco’s LVA system.

What about the claims made in the press?

So, bearing all that in mind, what are we to make of Southwark’s claim that its Voice Risk Analysis system has caught nearly 4,000 Council Tax fraudsters, saving the council more than £1.4 million in just four months?

Well, first, we don’t know what proportion of these claimed savings comes from reduced administration costs arising from fast-tracking claimants identified by the system as low risk, and what proportion may be down to claimants either being caught committing fraud or revising/withdrawing their claims voluntarily in order to avoid being caught. The average saving that the council is claiming is £350 per claimant, based on the figure of 4,000 alleged fraudsters, which is actually close to the value of the single person discount on a band E property in Southwark (where the full band E charge is £1489.72 including the GLA element). There are currently around 13,500 band E properties in the borough, which account for 10.6% of the total housing stock.

That said, the figures could easily include overpayments recovered for false claims submitted in previous years, which would bump up the savings quite a bit. At the same time, the Neighbourhood Statistics datasets for Southwark show that there were just under 40,000 single person households in the area in 2010, so we’re looking at around 1 in 10 single person discount claims being fraudulent, which is high by anyone’s standards.
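For anyone who wants to check the arithmetic in the last two paragraphs, here it is as a few lines of Python. Every figure comes from the text except the size of the single person discount, which I’ve assumed is the standard 25% council tax discount; the ‘nearly’ and ‘just under’ attached to the original figures mean the results are approximate.

```python
# Figures quoted in the text (approximate where the text says "nearly" or "just under")
claimed_saving_gbp = 1_400_000     # "more than £1,400,000 in four months"
claimed_fraudsters = 4_000         # "nearly 4,000"
band_e_charge_gbp = 1489.72        # Southwark band E, including the GLA element
single_person_households = 40_000  # "just under 40,000" in 2010

# Assumption, not from the text: the standard 25% single person discount
SINGLE_PERSON_DISCOUNT = 0.25

saving_per_fraudster = claimed_saving_gbp / claimed_fraudsters
discount_value = band_e_charge_gbp * SINGLE_PERSON_DISCOUNT
fraud_rate = claimed_fraudsters / single_person_households

print(f"claimed saving per alleged fraudster:  £{saving_per_fraudster:,.2f}")
print(f"single person discount on band E:      £{discount_value:,.2f}")
print(f"implied share of single person claims: {fraud_rate:.0%}")
```

That gives £350 per alleged fraudster against a discount worth about £372 a year on a band E property, and an implied fraud rate of roughly one in ten single person households.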

Without more information, it’s difficult to say for certain whether or not these figures stand up. What is clear, from the trial data and media reports during the trials, is that councils using this system have a history of overclaiming on the basis of the system’s results.

In 2008, for example, the Guardian reported that the phase 1 trial in Lambeth had caught nearly 400 fraudsters fiddling their benefit claims, yet the validated data from the trial supplied by the DWP shows that during the whole phase 1 trial, only 192 claimants with overpayments were identified, only 29 of whom were actually classified by the system as high risk claimants.

Harrow was, for quite some time, the flagship trial site in the eyes of the press and, in May 2008, the specialist technology news site ‘The Inquirer’ reported claimed cost savings of £420,000 in phase 1. By February 2009, The Times were reporting savings of ‘over £336,000’ but, by the middle of March of the same year, the Guardian were being told that Harrow Council had actually saved only £110,000 in benefits. What the DWP’s dataset shows is that over both phases, the total number of validated claimants with overpayments for the area came in at just 90, of which only 16 had been flagged as high risk. As for why the figure changed so much over the course of just 10 months, that is perhaps best explained by some of the assumptions that the council was making back in May 2008, which The Inquirer reported as follows:

The figure was calculated on the assumption that 132 people who refused to complete the voice-risk analysis assessment would otherwise have tried to cheat the system; and that 500 people who, though they had been flagged as low risk, had declared their personal circumstances had changed and no longer needed benefits would also have otherwise attempted to cheat the system.

Some of these people may well have withdrawn their claims or declared a change in circumstances in order to avoid being caught out, but others, particularly those declaring a change in circumstances, may genuinely have had a change in circumstances shortly before being contacted by the council about their claims.
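Expressed as proportions, the DWP’s validated figures for Lambeth and Harrow quoted earlier are even less flattering. This short Python sketch uses only the counts given in the text; the function name is my own.

```python
validated = {
    # site: (claimants with validated overpayments, of which the system flagged high risk)
    "Lambeth (phase 1)": (192, 29),
    "Harrow (phases 1 and 2)": (90, 16),
}


def share_flagged(overpayments: int, flagged_high_risk: int) -> float:
    """Fraction of validated overpayment cases that the system had flagged as high risk."""
    return flagged_high_risk / overpayments


results = {site: share_flagged(*counts) for site, counts in validated.items()}
for site, share in results.items():
    overpayments, flagged = validated[site]
    print(f"{site}: {flagged} of {overpayments} overpayment cases flagged ({share:.0%})")
```

That works out at roughly 15% for Lambeth and 18% for Harrow, so the large majority of the validated overpayment cases in both areas were not among the claimants the system had flagged as high risk.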

What this illustrates is perhaps the only genuinely working feature of this system, what psychologists call ‘the bogus pipeline effect’.

Put simply, if you can con people who have been committing fraud, or are intending to, into thinking that you do have a working lie detector, then at least some of them will think twice about trying it on, at the risk of being caught, and either alter or withdraw their claim. This all, of course, relies heavily on people buying into the idea that the system actually works, which may go some way towards explaining why councils using it are keen to make unvalidated and, at least for the time being, unverified public claims about the number of frauds being identified and the size of any associated cost savings. Not only is it good PR but, if it helps to create the impression that the system works, then it may increase the prospects of the council benefiting from the bogus pipeline effect.

Whether you regard that as an ethical approach to fraud reduction is another matter entirely.

That leaves us just one final issue to cover.

What is Capita’s role in promoting this system?

This is easily explained if you follow the money.

Nemesysco owns the patents and copyright on the software system used to carry out the Voice Risk Analysis checks and has an exclusive licence agreement with a British company, Digilog UK, to market and supply the system in the UK.

Digilog UK originally had its own exclusive 10-year deal with an insurance claims investigation company called Brownsword Ltd, which gave Brownsword the sole right to market and deploy Nemesysco’s software system in the public sector. Capita acquired that licence agreement in 2004, when it bought Brownsword from its previous owner, Isis Equity Partners, for a reported £8.5 million.

So Capita owns the exclusive rights to use Nemesysco’s system in its UK public sector contracts. Had the DWP trial proved successful, that deal would have put the company on the fast track to a near monopoly over any outsourced benefit claim processing contracts in the UK because it, and only it, had the rights to use the Nemesysco software, the only part of the entire system that – because it’s patented – has any real commercial value.

This is, perhaps, the most ironic element of this whole sorry story. Of the two core components of the Voice Risk Analysis system used in the DWP trials, the only one that has any scientific validity is the scripted interviews. There is a solid body of published research on the psychology of interviewing, in particular witness interviews in criminal justice settings, on which the scripted questions used in Voice Risk Analysis are based. In purely commercial terms, however, this scripting has very little value attached to it. All the relevant research is already readily accessible in papers published in scientific journals, so much so that any competitor looking for a way into this particular market could easily develop its own scripting from first principles for not much more than the cost of hiring a halfway decent psychologist to do the work.

Only when the scripting is tied into Nemesysco’s patented software system is there any commercial value, or advantage, in this system. The chain of licensing deals, from Nemesysco to Digilog UK and on to Capita, means that only Capita has access to both the software and the scripting. So, as long as Capita can sell local authorities the idea that the system might actually work, it has an advantage over its competitors when bidding for contracts and an opportunity to try and recoup some of the £6.5 million it laid out in 2004 when acquiring the rights to what is, in reality, a complete and utter steaming pile of worthless pseudoscientific bullcrap.

Conclusion.

So that’s more or less the full picture and to sum up:

1. Voice Risk Analysis doesn’t work because one of the two components of the system is based on nothing more than a bunch of pseudoscientific nonsense with no credible foundations in actual speech science.

2. The DWP’s £2 million trial was a failure, not least because it was poorly designed and implemented from the outset, failed to include a proper technical evaluation of the software system used to evaluate claimant interviews, and because the DWP chose to disregard scientific evidence showing the system to be a lemon. Then, to compound matters further, the DWP bottled it completely when they wrote up their evaluation, leaving the way clear for Capita to continue hawking the system around the public sector despite the trial itself having provided clear evidence of the system’s unreliability.

3. If you happen to be a councillor on one of the councils that is currently using this system – and Southwark is not the only council using it to assess claims for the single person’s Council Tax discount – then, unless your council is routinely carrying out its own random follow-up checks on claimants the system identifies as low risk, you have absolutely no way of actually monitoring the system’s performance and, worse still, no way of knowing exactly how many fraudulent benefit claims are being fast-tracked through without adequate checks on the back of the unreliable assessments it delivers.

So, the next time you’re handed the performance data for this system, just remember that out of just over 5,600 ‘low risk’ claimants who underwent proper follow-up checks during the DWP’s trial, a little over 1 in 5 were found to have been overpaid on their claim while, in the worst performing councils in the trial, as many as 9 out of 10 claimants classified as high risk turned out to have submitted perfectly genuine claims.

In fact, of the 1634 people flagged as high risk and whose claims were actually fully investigated during the phase 2 trials, around 1 in 19 were actually found to have been underpaid on their claim after further investigations had been carried out.
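The same kind of back-of-the-envelope check can be applied to the DWP trial figures above. This sketch assumes exactly 5,600 low-risk claimants and a rate of exactly 1 in 5; because the text says ‘just over’ and ‘a little over’, the first result should be read as a rough lower bound rather than an exact count.

```python
# "Just over 5,600" low-risk claimants and "a little over 1 in 5" overpaid,
# rounded down here to 5,600 and 1/5, so this estimate is a rough lower bound.
low_risk_checked = 5600
low_risk_overpaid = round(low_risk_checked / 5)

# Phase 2: high-risk claimants whose claims were fully investigated, of whom
# around 1 in 19 turned out to have been underpaid rather than overpaid.
high_risk_investigated = 1634
high_risk_underpaid = round(high_risk_investigated / 19)

print(f"'Low risk' claimants found to be overpaid: at least ~{low_risk_overpaid}")
print(f"'High risk' claimants found to be underpaid: ~{high_risk_underpaid}")
```

On those assumptions, at least around 1,120 claimants the system waved through as ‘low risk’ had in fact been overpaid, while around 86 of the fully investigated ‘high risk’ claimants were owed money rather than owing it.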

There were also a further 1390 ‘high risk’ claimants who received no follow-up by the end of the trial, many of them – according to staff who worked on this system during the trials – because it was absolutely obvious that the claimant was genuine, regardless of what the system said. From what I’ve been told, privately, a significant proportion of the ‘high risk’ claimants who weren’t investigated were elderly people on fixed incomes who had neither the means nor the opportunity to even try and defraud the system.

18 thoughts on “Capita still peddling pseudoscience to local authorities”